Last week, I shared an article about Amazon's "Just Walk Out" technology – and how it likely required a team of human validators and data labelers.

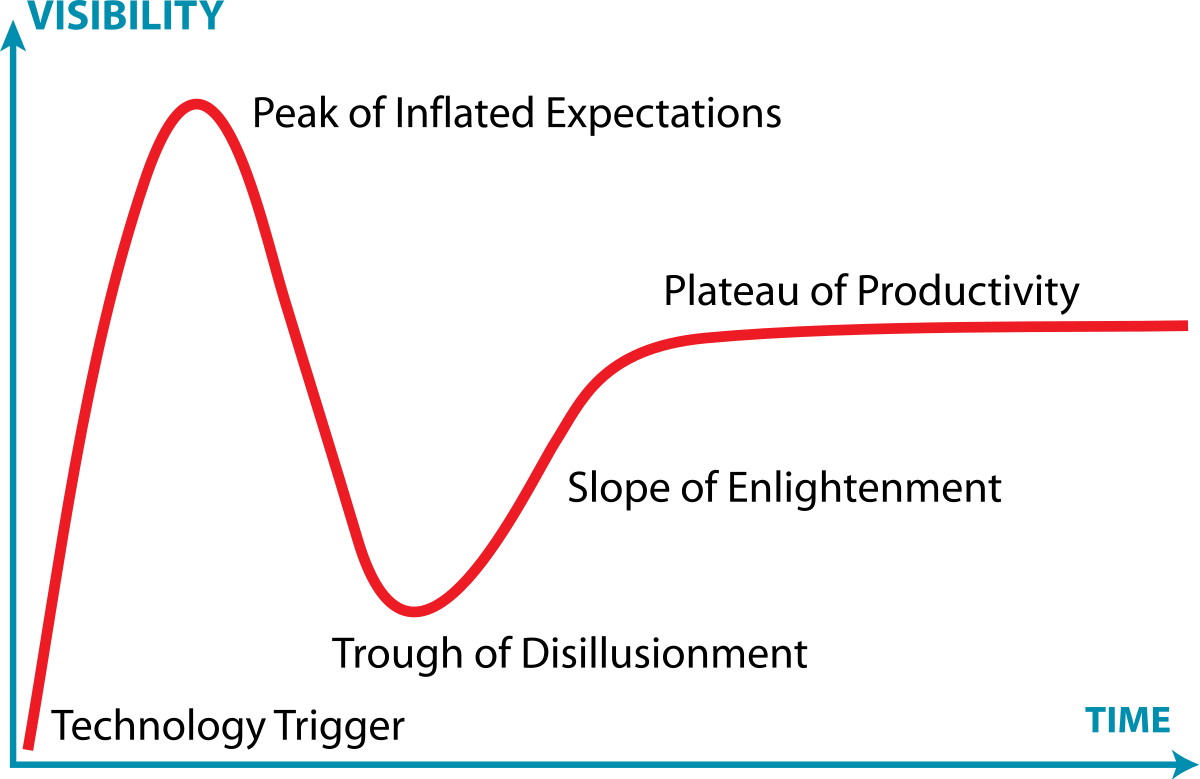

My takeaway from the article was that we're right at the peak of inflated expectations and about to enter the trough of disillusionment.

Gartner via Wikipedia

One of my friends sent me this video in response.

via Sasha Yanshin

It's a pretty damning video from someone who is frustrated with AI, but it makes several interesting points. The presenter discusses Amazon's recent foible, Google's declining search quality, the rise of poorly written AI-generated articles, GPT's web-scraping scandals, and the increasingly generic responses we see as everyone uses AI everywhere.

Yanshin attributes the gap between actual results and the excitement surrounding AI stocks to the substantial investments from technology giants. But as most bubbles show, money will be the catalyst for amazing things, and for some amazing failures and disappointments too.

His final takeaway is that, regardless of its current state, AI is coming and will undoubtedly improve our lives.

If I were to add some perspective as someone in the industry, it would be this.

AI Is Overdelivering in Countless Ways

There will always be a gap between expectations and reality, because there will always be a gap between the hype cycle and the adoption cycle. AI is already seamlessly integrated into your life. It underpins your smartphone, your Roomba, Alexa, your maps app, etc. It has also massively improved supply chain management, data analytics, and more.

That's not what gets media coverage ... because it's not sexy ... even if it's real.

Overhype has existed for much longer than AI has been in the public eye. An easy example is the initial demo of the iPhone, which was almost totally faked.

As someone who has arguably been creating AI since the mid-'90s, I find today's progress and capabilities hard to believe. They're almost good enough to seem like science fiction.

The Tool Isn't Usually The Problem

Artificial intelligence is not a substitute for the real thing, and it certainly can't compensate for a lack of the real thing.

I know I sound like a broken record, but AI is a tool, not a panacea. Misusing it, like using a shovel as a hammer, leads to disappointment. And it doesn't help if you're trying to hammer nails when you should be laying bricks.

ChatGPT is very impressive, as are many other generative AI tools. However, they're still products of the data used to train them. They don't check that what they tell you is factual; they can only generate responses based on the data they were trained on.

If you give an AI tool a general prompt, you'll likely get a general answer. Crafting precise prompts increases their utility and can create surprising results.

Even if AI achieves only 80% of the desired outcome on its own, it did so without a human, a salary, or hours and days of work.

Unfortunately, if you're asking the wrong questions, the answers still won't help you.

That's why it matters not only that you use the right tool but also that you use it to solve the right problem. Too often, businesses lose sight of the problems they're trying to solve because they get distracted by bright, shiny new opportunities.

Conclusion

Separating the wheat from the chaff has become more complicated, and not just in AI. Figuring out what news is real, who to trust, and which companies won't misuse your data has almost become a full-time job.

If you take the time, you will see a lot of exciting progress.

Public perception is likely to trend downward in the next news cycle, which is to be expected. After the peak of inflated expectations comes the trough of disillusionment.

Regardless, AI will continue to become more capable, ubiquitous, and autonomous. The question is only how long until it affects your business and industry.

What's the most exciting technology you've seen recently?

A Few Graphs On The State of AI in 2024

Every year, Stanford puts out an AI Index¹ with a massive amount of data attempting to sum up the current state of AI.

In 2022, it was 196 pages; last year, it was 386; now, it’s over 500 ... The report details where research is going and covers current specs, ethics, policy, and more.

It is super nerdy ... yet it's probably worth a skim. (Or ask one of the new AI services to summarize the key points, put them into an outline, and draft a strategy for your business from the items most likely to create a sustainable competitive advantage in your industry.)

For reference, here are my highlights from 2022 and 2023.

AI as a whole received less private investment than the year before, despite an 8x increase in funding for generative AI over the past year.

Even with less private investment, progress in AI accelerated in 2023.

We saw the release of new state-of-the-art systems like GPT-4, Gemini, and Claude 3. These systems are also much more multimodal than previous systems. They’re fluent in dozens of languages, can process audio and video, and even explain memes.

So, while we’re seeing a decrease in the rate at which AI gets investment dollars and new job headcount, we’re starting to see the dam overflow. The groundwork laid over the past few years is paying dividends. Here are a few things that caught my eye and might help set some high-level context for you.

Technological Improvements In AI

via AI Index 2024

Just since 2022, the capabilities of key models have increased exponentially. LLMs like GPT-4 and Gemini Ultra are very impressive. In fact, Gemini Ultra became the first LLM to reach human-level performance on the Massive Multitask Language Understanding (MMLU) benchmark. However, there's a direct correlation between the performance of those systems and the cost to train them.

The number of new LLMs has doubled in the last year. Two-thirds of the new LLMs are open-source, but the highest-performing models are closed systems.

While the pure technical improvements are important, it's also worth recognizing AI's growing creativity and range of applications. For example, Auto-GPT takes GPT-4 and makes it almost autonomous. It can perform tasks with very little human intervention, prompt itself, access the internet, and manage both long-term and short-term memory.
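The "self-prompting" idea can be pictured as a simple loop in which each model reply is fed back into the next prompt. What follows is a minimal, hypothetical Python sketch, not Auto-GPT's actual code: `run_agent`, `make_scripted_model`, the prompt wording, and the `DONE` stop signal are all assumptions, and a scripted stand-in replaces the real GPT-4 call so the example runs offline. It also leaves out internet access and long-term memory.

```python
from typing import Callable, Iterable, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns a reply.
Model = Callable[[str], str]


def run_agent(goal: str, model: Model, max_steps: int = 5) -> List[str]:
    """Minimal self-prompting loop (an illustrative sketch, not Auto-GPT's code)."""
    memory: List[str] = []  # short-term memory: steps the agent has taken so far
    for _ in range(max_steps):  # hard cap so the loop cannot run forever
        # Build the next prompt from the goal plus everything done so far.
        prompt = (
            f"Goal: {goal}\n"
            f"Steps so far: {memory}\n"
            "Reply with the next step, or DONE if the goal is met."
        )
        reply = model(prompt).strip()
        if reply == "DONE":
            break
        memory.append(reply)  # this reply becomes part of the next prompt
    return memory


def make_scripted_model(replies: Iterable[str]) -> Model:
    """Offline stand-in that returns canned replies in order, then DONE."""
    it = iter(replies)
    return lambda prompt: next(it, "DONE")


if __name__ == "__main__":
    model = make_scripted_model(["Research competitors", "Draft launch plan"])
    print(run_agent("Plan a product launch", model))
```

The `max_steps` cap is the kind of guardrail that "very little human intervention" makes necessary: without it, a model that never replies `DONE` would keep prompting itself indefinitely.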

Here is an important distinction to make … We're not only getting better at creating models; we're getting better at using them. Meanwhile, the models are getting better at improving themselves.

The Proliferation of AI

First, let’s look at patent growth.

via AI Index 2024

The adoption of AI and the claims on AI "real estate" are still increasing. The number of AI patents has skyrocketed. From 2021 to 2022, AI patent grants worldwide increased sharply by 62.7%. Since 2010, the number of granted AI patents has increased more than 31-fold.

As AI has improved, it has increasingly forced its way into our lives. We’re seeing more products, companies, and individual use cases for consumers in the general public.

While the number of AI jobs has decreased since 2021, job positions that leverage AI have significantly increased.

Also, despite the decrease in private investment, massive tranches of money are moving toward key AI-powered endeavors. For example, BioNTech acquired InstaDeep for $680 million to advance AI-powered drug discovery, Cohere raised $270 million to develop an AI ecosystem for enterprise use, Databricks bought MosaicML for $1.3 billion, and Thomson Reuters acquired Casetext, an AI legal assistant.

Not to mention the investments and attention from companies like Hugging Face, Microsoft, Google, Bloomberg, Adobe, SAP, and Amazon.

Ethical AI

via AI Index 2024

Unfortunately, the number of AI misuse incidents is skyrocketing. And it's more than just deepfakes: AI can be used for many less visible nefarious purposes, on top of its intrinsic risks, such as those posed by self-driving cars. A global survey on responsible AI highlights that companies' top AI-related concerns include privacy, data security, and reliability.

When you invent the car, you also invent the potential for car crashes ... when you ‘invent’ nuclear energy, you create the potential for nuclear weapons.

There are other potential negatives as well. For example, like cryptocurrency mining, many AI systems use vast amounts of energy and produce carbon emissions. So, the ecological impact has to be taken into account as well.

Luckily, many of today's best minds are focused on creating bumpers to rein in AI and to prevent and discourage bad actors. The number of AI-related regulations in the US has risen significantly, both in the past year and over the last five years. In 2023, there were 25 AI-related regulations, a stark increase from just one in 2016, and the total grew by 56.3% last year alone. Regulating AI has also become increasingly important in legislative proceedings across the globe, with a 10x increase since 2016.

Not to mention, US government agencies allocated over $1.8 billion to AI research and development spending in 2023. Our government has tripled its funding for AI since 2018 and is trying to increase its budget again this year.

Conclusion

Artificial Intelligence is inevitable. Frankly, it’s already here. Not only that ... it’s growing, and it’s becoming increasingly powerful and impressive to the point that I’m no longer amazed by how amazing it continues to become.

Despite America leading the charge in AI, Americans are also among the least positive about AI products and services. China, Saudi Arabia, and India rank the highest. Only 34% of Americans anticipate AI will boost the economy, and 32% believe it will enhance the job market. Significant demographic differences exist in perceptions of AI's potential to enhance livelihoods, with younger generations generally more optimistic.

We're at an interesting inflection point where fear of repercussions could derail and diminish innovation, slowing down our technological advance.

Much of this fear is based on emerging models demonstrating new (and potentially unpredictable) capabilities. Researchers have shown that these emergent capabilities mostly appear when nonlinear or discontinuous metrics are used ... but vanish with linear or continuous metrics. So far, even with LLMs, intrinsic self-correction has proven very difficult: when a model is left to decide on self-correction without guidance, performance declines across all benchmarks.
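The metric effect behind that finding can be shown with a toy calculation. Assume a model's per-token accuracy (a continuous metric) improves steadily with scale, and score it instead by exact match on a 10-token answer (a nonlinear metric, since every token must be right). With independent errors, exact match is simply per-token accuracy raised to the 10th power. The answer length, the accuracy values, and the independence assumption are all illustrative choices, not figures from the AI Index.

```python
# Toy illustration of how the choice of metric can make a capability look "emergent".
# Hypothetical assumptions: 10-token answers, independent token errors,
# and per-token accuracy that rises steadily as models get larger.
ANSWER_TOKENS = 10


def exact_match_rate(per_token_accuracy: float, tokens: int = ANSWER_TOKENS) -> float:
    """Nonlinear metric: all tokens must be correct, so the rate is p ** tokens."""
    return per_token_accuracy ** tokens


if __name__ == "__main__":
    # Steadily increasing per-token accuracies stand in for successively larger models.
    for p in (0.5, 0.6, 0.7, 0.8, 0.9, 0.95, 0.99):
        # The continuous metric (p) climbs smoothly; exact match hugs zero, then jumps.
        print(f"per-token accuracy {p:.2f} -> exact-match rate {exact_match_rate(p):.3f}")
```

Plotted against model size, the per-token column rises gradually while the exact-match column sits near zero and then climbs sharply, which can look like a sudden new capability even though the underlying improvement was smooth.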

If we don’t continue to lead the charge, other countries will … you can already see it with China leading the AI patent explosion.

We need to address the fears and culture around AI in America. The benefits seem to outweigh the costs – but we have to account for the costs (time, resources, fees, and friction) and attempt to minimize potential risks – because those are real (and growing) as well.

Pioneers often get arrows in their backs and blood on their shoes. But they are also the first to reach the new world.

Luckily, I think momentum is moving in the right direction. Last year, it was rewarding to see my peers start to use AI apps. Now, many of them are using AI-inspired vocabulary and thinking seriously about how best to adopt AI into the fabric of their business.

We are on the right path.

Onwards!

¹ Nestor Maslej, Loredana Fattorini, Raymond Perrault, Vanessa Parli, Anka Reuel, Erik Brynjolfsson, John Etchemendy, Katrina Ligett, Terah Lyons, James Manyika, Juan Carlos Niebles, Yoav Shoham, Russell Wald, and Jack Clark, "The AI Index 2024 Annual Report," AI Index Steering Committee, Institute for Human-Centered AI, Stanford University, Stanford, CA, April 2024. The AI Index 2024 Annual Report by Stanford University is licensed under Attribution-NoDerivatives 4.0 International.

Posted at 05:33 PM in Business, Current Affairs, Gadgets, Ideas, Market Commentary, Science, Trading, Trading Tools, Web/Tech
