When I was a child, NASA got to the Moon with computers much less sophisticated than those we now keep in our pockets.

At that time, when somebody used the term "computers," they were probably referring to people doing math. The idea that businesses or individuals would use computing devices, as we do now, was far-fetched science fiction.

Recently, I shared an article on the growing amount of "compute" used in machine learning. We showed that it has doubled every six months since 2010, with today's largest models using datasets of up to 1.9 trillion (1,900,000,000,000) points.
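To get a feel for what "doubling every six months" means, here is a minimal sketch of the arithmetic. The function name `growth_factor` and its parameters are my own illustration, not something from the article, and it assumes a perfectly steady doubling period.

```python
def growth_factor(years: float, doubling_months: float = 6.0) -> float:
    """Return how many times larger a quantity gets after `years`,
    assuming it doubles once every `doubling_months` months."""
    doublings = years * 12.0 / doubling_months  # number of doubling periods elapsed
    return 2.0 ** doublings


# Two doublings per year means 4x per year under this assumption.
for years in (1, 5, 10):
    print(f"{years:>2} years -> {growth_factor(years):,.0f}x")
```

Under that assumption, one year gives 4x, five years gives 1,024x, and ten years gives a little over a million-fold increase.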

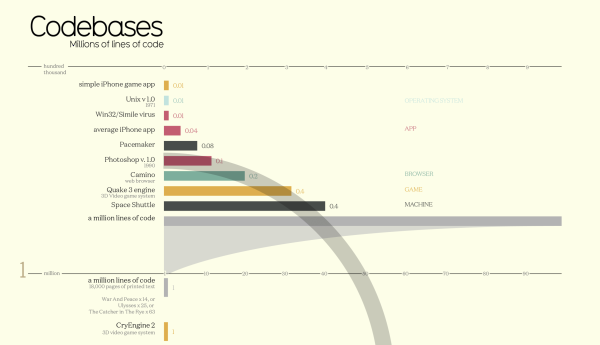

This week, I want to take a look at lines of code. Think of that as a loose proxy showing how sophisticated software is becoming.

As you go through the chart, you'll see that in the early 2000s we had software with up to approximately twenty-five million lines of code. Meanwhile, today, the average car uses one hundred million, and Google uses two billion lines of code across its internet services.

For context, if you count DNA as code, the human genome's roughly 3.3 billion base pairs amount to 3.3 billion lines of code. So, while technology has advanced massively, we're still not close to emulating the complexity of humanity.

Another thing to consider is that when computers had tighter memory constraints, coders had to be deliberate about how they used each line of code or variable. They found hacks and workarounds to make a lot out of a little.

However, with an abundance of memory and processing power, software can get bloated as lazy (or lesser) programmers get by with inefficient code. Consequently, not all the increase in size results from increasing complexity – some of it is the result of lackadaisical programming or more forgiving development platforms.

The teams behind better-managed products consider both whether the code works as intended and whether it uses resources reasonably.

In our internal development, we look to build modular code that allows us to re-use equations, techniques, and resources. We look at our platform as a collection of evolving components.

As bigger systems become cheaper and more practical to run, we can use our intellectual property assets differently than before.

For example, a "trading system" doesn't have to trade profitably to be valuable anymore. It can be used as a "sensor" that generates useful information for other parts of the system. It helps us move closer to what I like to call digital omniscience.

As a result of increased capabilities and capacities, we can use older and less capable components to inform better decision-making.
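To make the "sensor" idea concrete, here is a toy sketch of how a higher layer might blend signals from older components. Everything in it is hypothetical: the `Sensor` class, the two toy signals, the weights, and the prices are illustrations I made up for this post, not a description of our actual platform.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Sensor:
    """An older system reused as an information source (hypothetical)."""
    name: str
    signal: Callable[[Sequence[float]], float]  # maps prices to -1.0, 0.0, or +1.0
    weight: float  # how much the higher layer trusts this sensor


def momentum(prices: Sequence[float]) -> float:
    """+1 if the last price is above the first, -1 if below, 0 if equal."""
    change = prices[-1] - prices[0]
    return float((change > 0) - (change < 0))


def mean_reversion(prices: Sequence[float]) -> float:
    """+1 if the last price is below the window average, -1 if above."""
    average = sum(prices) / len(prices)
    gap = average - prices[-1]
    return float((gap > 0) - (gap < 0))


def combined_view(sensors: Sequence[Sensor], prices: Sequence[float]) -> float:
    """Weighted average of all sensor readings, between -1 and +1."""
    total_weight = sum(s.weight for s in sensors)
    return sum(s.weight * s.signal(prices) for s in sensors) / total_weight


# Made-up components and data, for illustration only.
sensors = [
    Sensor("momentum_v1", momentum, weight=1.0),
    Sensor("mean_reversion_v2", mean_reversion, weight=3.0),
]
prices = [100.0, 101.0, 103.0, 106.0]
print(combined_view(sensors, prices))  # -0.5
```

Neither toy signal needs to be profitable on its own here. Each one simply contributes a reading, and the higher layer decides how much weight to give it.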

In the past, computing constraints limited us to using only our most recent system at the highest layer of our framework.

We now have more ways to win.

But bigger isn't always better – and applying constraints can encourage creativity.

Nonetheless, as technology continues to skyrocket, so will the applications and our expectations about what they can do for us.

We live in exciting times … Onwards!
