I often talk about Machine Learning and Artificial Intelligence in broad strokes. Part of that is based on me – and part of that is a result of my audience. I tend to speak with entrepreneurs (rather than data scientists or serious techies). So talking about training FLOPs, parameters, and the actual benchmarks of ML is probably outside of their interest range.

But, every once in a while, it's worth taking a look into the real tangible progress computers have been making.

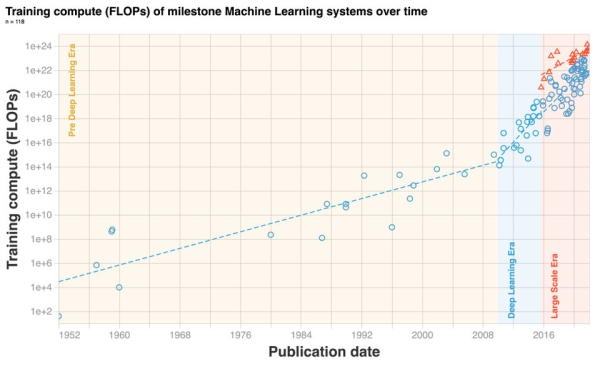

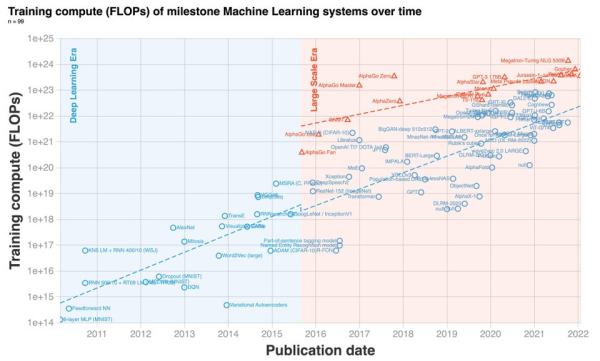

Less Wrong put together a great dataset on the growth of machine learning systems between 1952 and 2021. While many variables matter in judging the performance and intelligence of systems, their dataset focuses on parameter count. It does this because parameter counts are easy to find and are also a reasonable proxy for model complexity.

via Giuliano Giacaglia and Less Wrong

One of the simplest takeaways is that ML training compute has been doubling roughly every six months since 2010. Moore's Law, by comparison, describes transistor counts (and, loosely, compute power) doubling about every two years, so we're radically eclipsing that pace, especially as we've entered a new era of technology.
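To get a feel for how different those two doubling rates are, here is a minimal back-of-the-envelope sketch. It assumes perfectly steady doubling over the 2010 to 2021 window (real progress is lumpier), and the function name and window are illustrative choices of mine rather than anything from the Less Wrong dataset.

```python
def growth_factor(years: float, doubling_time_years: float) -> float:
    """Total multiplicative growth after `years`, assuming a fixed doubling time."""
    return 2 ** (years / doubling_time_years)


# Assumption: measure from 2010 to the end of the dataset in 2021.
years = 2021 - 2010

# Doubling every six months (the ML training compute trend cited above).
ml_growth = growth_factor(years, doubling_time_years=0.5)

# Doubling every two years (the Moore's Law pace).
moore_growth = growth_factor(years, doubling_time_years=2.0)

print(f"ML training compute: ~{ml_growth:,.0f}x")
print(f"Moore's Law pace:    ~{moore_growth:,.0f}x")
```

Under those assumptions, six-month doubling compounds to a multi-million-fold increase over the window, while two-year doubling yields less than a fifty-fold increase. The gap comes entirely from compounding: 22 doublings versus 5.5.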

Now, to balance this out, we have to ask: what actually makes AI intelligent? Model size is important, but so are factors like training compute and training dataset size. You also must consider the actual results that these systems produce. And model size isn't a 1-to-1 match with model complexity, because architectures and domains have different inputs and needs (but can have similar sizes).

A few other brief takeaways: language models have seen the most growth, while gaming models have the fewest trainable parameters. This is somewhat counterintuitive at first glance, but it makes sense, since the complexity of games means those models face more constraints in other areas. If you really get into the data, there are plenty more questions and insights to be had, and you can learn more from either Giuliano Giacaglia or Less Wrong.

And a question to leave you with is whether the scaling laws of machine learning will differ as deep learning becomes more prevalent. Right now, model size comparisons suggest not, but there are so many other metrics to consider.

What do you think is going to happen?

Doom And Gloom From Charlie Munger

In case inflation wasn't stressing you out enough, Berkshire Hathaway's Vice Chairman, Charlie Munger, channeled Nouriel Roubini in his tirade on the dangers of inflation.

via Yahoo Finance

For context, the CPI has inflation rising at the fastest rate in over 40 years. Meanwhile, Munger's current working hypothesis is that our currency will become worthless over the next hundred years.

Munger paints a dire picture - but there are a lot of "what-ifs." The infusion of cash into the economy during the pandemic certainly pushed us in a dangerous direction (despite saving the economy). However, 100 years is a long time, and there are many steps we can take as an economy to slow that snowball. One of those is the continuing scale of innovation. As new technologies arise, and the value chains of industries change, so do the economics of our nation.

