At the core of Capitalogix's existence is a commitment to systemization and automation.

Consequently, I play with a lot of tools. I think of this as research, discovery, and skill-building. There is a place for that in my day or week. However, few of those tools make it into my real work routine.

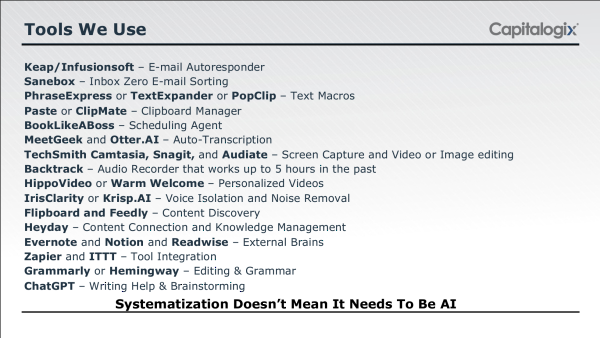

Here is a list of some of the tools that I recommend. I'm not saying I use them regularly ... but in some cases, I should (it reminds me of some exercise equipment I have).

Since the late '90s, I've been collecting tools to make my business more efficient and my life easier.

It's a little embarrassing, but my most popular YouTube video is an explainer video on Dragon NaturallySpeaking from 13 years ago. It was (and still is) dictation software, but from a time before your phone gave you that capability.

Today, I have more tools than I know what to do with, but here are a few that keep coming up in conversations.

Daily.AI - AI Newsletters

We've launched a new newsletter that is AI-curated by Daily.AI. It matches the tone and style of my newsletters, gets good engagement, and (honestly) looks much better than what we put out.

Our handwritten newsletters still do better on some metrics - but it's a nice addition and a promising technology.

For transparency, our handwritten newsletters get around 45% opens and 10% click-throughs, while our new AI newsletter is averaging around 38% opens but around 30% click-throughs. Our normal newsletter isn't focused on links - which is why its click-through rate isn't as good.

That said, Daily.AI is a great tool that makes creating compelling two-way communication with your audience far easier and cheaper (in time, money, and effort) than doing something similar manually.

This is an example of how people won't get replaced by AI ... people will get replaced by people who use AI better.

Opus.pro - AI Video Repurposing

Opus.pro takes your long-form video content and cuts it into short-form content that you can post as teasers to other channels like YouTube Shorts, TikTok, or Instagram Reels.

I naturally tend to do more long-form content. I go on a podcast, or I speak at a conference or to a mastermind group. I end up with a 30-minute+ video that I don't have the time or interest to use. This tool allows me to find the best parts quickly - and still allows editing to make it perfect.

For someone serious about pursuing video, it's not worth it. But if you want to be present online and aren't worried about making video your career, this is a great tool to streamline and systematize your process.

Type.AI

Type.AI is an interesting AI-first document editing tool. At its core, Type is a faster, better, and easier way to write.

A lot of people are using ChatGPT for some editing. Type is a good example of a next-generation tool that incorporates ChatGPT and other LLMs under the surface. It is aware of what you are doing and lets you know what it can do.

Type jumpstarts the creative process and banishes the blank page. Underneath its gorgeous UI are powerful features for generating ideas, querying your document, experimenting with different models, and easily formatting your work.

The point is that with a tool like this, you don't have to be good at prompt engineering. The tool does that for you – so you can focus on the writing.

GetVoila.AI

Voilà is an all-in-one AI assistant in a browser extension. That means it goes with you everywhere you go on the web and supercharges your browser by using ChatGPT to do what it does best. You don't have to do more than check what is available.

Voilà simplifies the process of working with the content of websites and URLs, making it easy to convert them into various types of content, summarize them, or extract key information. For example, if I am watching a YouTube video, I can right-click and choose "Summarize" - it creates a short summary of the video from the transcript. Or, while writing this sentence, a simple right-click lets me choose: Improve, Fix grammar, Make longer, Make shorter, Summarize, Simplify, Rephrase, or Translate.

This is more useful than I thought. I use it well ... then forget about it. When I use it again, I often find that it got better. I think you will find that with a lot of the tools these days.

Don't worry about how well you use tools like this. It is enough that you get better at using tools like these to accomplish what you really want.

Explore a little. Then, let me know what you found worth sharing.

Here Are Some Links For Your Weekly Reading - October 19th, 2024

Here are some of the posts that caught my eye recently. Hope you find something interesting.
