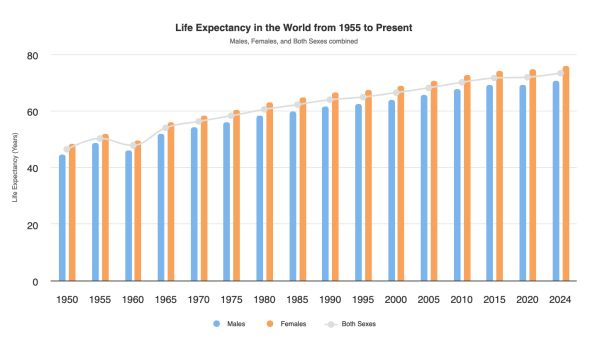

Life expectancy has been on a steady global rise for longer than I've been alive.

via worldometers

Meanwhile ... the United States has fallen to 48th on the list of countries with the highest life expectancy.

Hong Kong tops the list with an average life expectancy of 85.63 overall - and 88.26 years for females.

For comparison, the U.S.'s average life expectancy is only 79.46.

Many factors potentially impact the findings, including the average height and weight of a population (shorter and lighter people tend to live longer), diet, the healthcare system, and work/life balance.

While some of this is out of your control (OK, a lot of it is) - there are definitely things you can do to increase your healthy lifespan. Meanwhile, some people like Bryan Johnson are doing everything they can to live forever.

Popular Mechanics put together a video series called How to Live Forever, or Die Trying, where they interview scientists and anti-aging gurus to give you insight into pursuing a future without death.

Unfortunately, recent science has shown that adults in their mid-40s to early 60s begin to experience significant changes in their alcohol, caffeine, and lipid metabolism, an increase in risk of cardiovascular disease, and a noticeable decrease in their skin and muscle health. When you hit your 60s, you also begin to see negative changes in carbohydrate metabolism, immune regulation, kidney function, and a further decline in the previously mentioned factors.

Here's the good news. Not only are science and technology getting better, but you're always in control. You can make lifestyle changes to increase your longevity, and you can also find supplements, treatments, and protocols that can reverse those factors of aging. Even simple measures like increasing your physical activity or avoiding alcohol before bed can make a massive difference.

They say a healthy person has thousands of dreams, but an unhealthy person only has one.

That is one of the reasons I spend so much time and energy thinking about staying healthy, fit, and vital.

Focusing on the positive is important ... But to extend your healthy lifespan, you have to start by telling the truth and finding out what you and your body struggle with the most.

A doctor friend gave me some advice. He said it doesn't matter if you are on top of 9 out of 10 things ... it's the 10th that kills you.

Despite our best efforts, Mother Nature remains undefeated.

With that said, here are some of my previous articles on longevity and health:

The goal isn't just to stay alive longer; it's to live life to its fullest for as long as possible.

I recently joined a fantastic mastermind group called DaVinci 50, run by Lisa and Richard Rossi. It brings together a remarkable collection of medical professionals and entrepreneurs focused on the latest research, treatments, and opportunities in health and longevity.

Another great tool I rely on is Advanced Body Scan. Early detection is crucial, but so is tracking the history of your scans to monitor changes over time. In my opinion, the most valuable scan is always the next one.

Additionally, I use a growing list of trackers and biometric devices to measure my heart rate, along with apps and tools for mindfulness, breathwork, and journaling. Together, these practices recognize that mind, body, and spirit combine to define how you live your life.

To end this post, I'll use a farewell phrase I heard often while growing up ... it translates roughly to "go in health, come in health, and be healthy." It's a beautiful way to wish someone well on their journey, emphasizing the importance of health and well-being.

I hope you found something interesting. Let me know what things and practices work best for you.

Pattern Recognition In Trading

The Market has been volatile recently, with unusually large gains and losses as we enter the homestretch of the election season. Even though many markets are still near their highs, I'm sensing an increase in anxiety and fear in many of my peers.

While some believe that markets are random, others make money using rule-based trading systems that rely on specific patterns to identify favorable trading conditions.

Traders, at every level, search for a tradable edge. Some find it in fundamental analysis, others in technical analysis or chart-based patterns, while still others rely on an algorithmic or execution-based edge.

So, is there some magic unifying equation that defines the Market? Personally, I doubt it. Even though nothing always works, "something" always works in the markets. The challenge is to identify what that is and to ignore the rest.

Many patterns work from time to time, but when a particular pattern works may seem random. Here is why.

Understanding the Markets.

There is no such thing as a "Market" ... It is a collection of separate traders (each trading based on what they focus on, what they make it mean, and ultimately what they decide to do).

As a result, one of the reasons that markets experience volatility is that different groups buy or sell for different reasons at different times.

Consequently, even if one group trades using a consistent set of rules, a strategy that effectively counters those rules only works until that group stops trading them.

It works the other way too. If a large trader imposes their will, it changes the playing field for smaller traders.

Elephants Leave Tracks.

Smart traders follow the big money.

Large traders like governments, sovereign wealth funds, or mutual funds can affect markets while they buy or sell.

However, when they're done, some other group's strategy becomes the dominant force.

Experienced traders recognize that it is important to understand "who is in control" ... but not necessarily why they are trading.

That means you don't have to figure out every bit of information or rationale behind a strategy to make money. For example, suppose you were about to walk into a movie theater but were suddenly confronted with hundreds of people running in the other direction screaming. In that case, you don't have to understand precisely why it's happening to respond intelligently.

On a superficial level, that's the basis of trend following. It is also an example of pattern recognition.

Most hedge funds now use some form of pattern recognition in their trading systems.

Much of the analysis done to get a trading edge is simply a way to identify "who is in control" and what they are doing ... rather than why they are trading.

Here, we will examine why some traders rely on specific patterns to identify favorable trading conditions.

Some Patterns Are Logical.

Let's look at a common trading pattern called a "Triangle". You can think of the Triangle as a well-contested battle between the bulls and the bears. It is almost like an arm-wrestling match. Inside the pattern, neither side gives up much ground. However, when one side loses conviction, the market surges in the direction the winners push.

Here is a picture of a Triangle and the pattern's likely price projection.

Triangles are an example of a logical pattern. They are easy to see and easy to understand. In addition, it is easy for a trader to use a setup like this to define the likely risk and reward of a trade they are considering.
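To make the risk-and-reward idea concrete, here is a minimal Python sketch. It assumes a common charting rule of thumb (not something stated above): that an upside breakout from a triangle projects a move roughly equal to the triangle's height at its widest point. The class, the function, and every price are hypothetical and purely illustrative.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TriangleSetup:
    """Hypothetical description of a triangle pattern (all values illustrative)."""
    widest_high: float  # highest price at the triangle's widest point
    widest_low: float   # lowest price at the triangle's widest point
    breakout: float     # price where the market leaves the upper boundary
    stop: float         # exit price if the breakout fails


def risk_reward(setup: TriangleSetup) -> Tuple[float, float, float]:
    """Return (target, risk, reward_to_risk) for an upside breakout.

    Assumption: the projected move equals the triangle's height. This is a
    rule of thumb, not a guarantee.
    """
    height = setup.widest_high - setup.widest_low
    target = setup.breakout + height
    risk = setup.breakout - setup.stop
    if risk <= 0:
        raise ValueError("stop must sit below the breakout price")
    reward = target - setup.breakout
    return target, risk, reward / risk


setup = TriangleSetup(widest_high=110.0, widest_low=100.0, breakout=108.0, stop=104.0)
target, risk, ratio = risk_reward(setup)
print(f"target={target:.2f} risk={risk:.2f} reward/risk={ratio:.2f}")
```

With these made-up numbers, the trader would be risking 4 points to pursue a projected 10. Whether that projection is ever reached is a separate question that only testing can answer.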

Why Do Patterns Form in Markets Repeatedly? The Answer is Human Nature.

Markets are not always logical. Some would argue that Markets are rarely logical. If they were, intelligent people would get rich by following their instincts ... but that isn't how it works.

On some level, markets represent their participants' collective thoughts and emotions. So, even though conditions change, the collective response to fear and greed remains reasonably similar.

As a result, many patterns show up in market price data.

In General, Here's What Is Happening.

A move up of a certain degree will be met with some people who fear the move won't go higher ... so they decide to sell. Meanwhile, others will believe the move will trigger a whole different group of people to recognize an opportunity ... so they decide to buy.

The same thing happens with a big move down. At first, it triggers fear and selling. But at some point, to a particular group of traders, the move down will look like a discounted buying opportunity.

At its core, price is the primary indicator of investors' willingness to buy or sell. Things like velocity or slope are secondary, and show the intensity of their motivation.
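One way to put numbers on "velocity" and "slope" is to measure how fast price changes per bar. The sketch below is only an illustration: the function names and sample closes are made up, and a least-squares slope is just one reasonable definition among many.

```python
from statistics import mean
from typing import List


def rate_of_change(closes: List[float], lookback: int) -> float:
    """Percent change between the latest close and the close `lookback` bars ago."""
    if lookback <= 0 or len(closes) <= lookback:
        raise ValueError("need more closes than the lookback")
    past = closes[-1 - lookback]
    return (closes[-1] - past) / past * 100.0


def slope(closes: List[float]) -> float:
    """Least-squares slope of price against bar index (price units per bar)."""
    if len(closes) < 2:
        raise ValueError("need at least two closes")
    xs = range(len(closes))
    x_bar, y_bar = mean(xs), mean(closes)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, closes))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


# Two hypothetical up-moves: same direction, different intensity.
steady = [100.0, 101.0, 102.0, 103.0, 104.0]
surge = [100.0, 102.0, 104.0, 106.0, 108.0]

for name, closes in (("steady", steady), ("surge", surge)):
    print(name, round(rate_of_change(closes, 4), 2), round(slope(closes), 2))
```

Both series are rising, so price alone says buyers are in control of each. The steeper slope of the second series suggests they are more motivated.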

So, many of the patterns that you read about in books or magazines (with names like "head and shoulders", "cup and handle", or "double bottoms") are all just ways of explaining the natural response to certain conditions.

There is science involved in recognizing a specific pattern ... and art in selecting which pattern to rely on today.

But You Don't Have to Predict Anyone's Action - All It Takes Is An Intelligent Response.

It's the law of large numbers. An insurance company doesn't have to accurately predict when any individual will die; their actuaries have to figure out a reasonable estimate of how many people like that in their risk pool will die during the relevant period ... and price the coverage accordingly. Likewise, in the Market, patterns don't predict what an individual will do; they indicate what the majority will likely do.
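The actuarial point can be illustrated with a small simulation. This is a sketch under made-up assumptions: a 1% annual mortality rate, independent outcomes, and a seeded random generator so the run is repeatable. None of these figures come from real actuarial tables.

```python
import random


def observed_death_rate(pool_size: int, annual_rate: float, rng: random.Random) -> float:
    """Simulate one year for a risk pool and return the fraction who died."""
    deaths = sum(1 for _ in range(pool_size) if rng.random() < annual_rate)
    return deaths / pool_size


rng = random.Random(42)  # fixed seed so the run is repeatable
ASSUMED_RATE = 0.01      # hypothetical 1% annual mortality

for pool_size in (100, 10_000, 1_000_000):
    rate = observed_death_rate(pool_size, ASSUMED_RATE, rng)
    print(f"pool={pool_size:>9,}  observed rate={rate:.4%}")
```

In a small pool, the observed rate can land far from the assumed 1%. In a large pool, it tends to cluster near it. That is what lets an insurer price a pool without predicting what happens to any individual, and it is the same reason a pattern can be useful without telling you what any single trader will do.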

So now that you understand patterns, the rest is easy ... right?

Of course, it's not as easy as it sounds because these patterns are being played out across every Market and happen in different time frames as well. That means some people respond to the Market using a much longer time horizon than others. A pattern for them may be noise at a different level of focus.

It may be comforting to see familiar patterns occur whether you're looking at a minute-by-minute chart of the S&P or a weekly chart of gold ... but comfort doesn't make you money. Instead, ask whether what you are looking at is coincidental or causal. Said another way, does it simply explain what happened, or is it a valid prediction of what will happen?

Since many patterns are playing out across many markets at any given time, a human can't identify, validate, and trade all of them in real-time.

This is where computers and artificial intelligence truly shine. For example, we've developed a pattern mining technology that doesn't rely on traditional technical analysis patterns. Instead, it searches for patterns across various markets and time frames, uncovering edges that humans would never be able to detect on their own.

But even that simply adds more ways to win.

The only thing I can confidently predict is that volatility and noise will increase due to how markets work and the arms race for enhanced technical capabilities and information asymmetry. As volatility and noise continue to rise, what separates smart money from dumb money will likely be the ability to focus on what matters when it matters.

It is hard to do – and even harder to do consistently. But some things are inevitable. While technology may not immediately replace all human traders, it's becoming increasingly evident that those who leverage computers and advanced technology will outperform and eventually replace traders who rely solely on their human capabilities.

We live in interesting times.

Posted at 09:30 PM in Business, Current Affairs, Ideas, Market Commentary, Science, Trading, Trading Tools, Web/Tech