Last week, I brought up the concept of Economic Freedom. It reminded me of an idea I last shared in 2008, during the housing crisis.

Back then, I noticed how correlated and coordinated government actions were worldwide. During the pandemic, despite plenty of dissent, there was also a remarkable amount of coordination.

The concept of economic allies presupposes that we also have economic enemies. It’s easy to construct a theory that countries like Russia and China use financial markets to exert leverage in a nascent form of economic warfare.

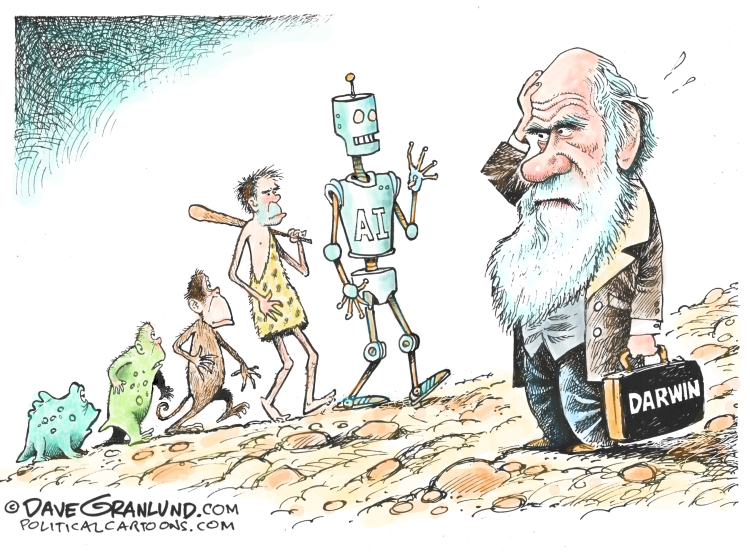

But it’s just as easy to argue that we are our own worst enemies. Our innate fear and greed instincts (and how we react to them) tend to lead us toward horrifying consequences. That has been evident in recent years, not just in society but also in business. I’m confident this pattern will persist with Artificial Intelligence, in both its potential benefits and its risks.

The butterfly effect suggests that a butterfly flapping its wings in Beijing one day can influence a rainstorm over Chicago a few days later. Similarly, in a world of extensive global communication, where automated trading programs (and even toasters) can interact with each other from anywhere on the globe, it is not surprising that market movements are becoming larger, faster, and more volatile.

Perhaps governments coordinate because they collectively recognize the need for a new form of protection against the increasing speed, size, and leverage behind market movements.

We can extend this idea to entities beyond governments, too. Nor does it have to be limited to traditional markets; it can include cryptocurrencies and other emerging technologies as well.

It’s worth understanding the currents, but we must also consider the undercurrents and countercurrents.

Conspiracy theories are rarely productive or helpful, but healthy skepticism is a great survival mechanism.

Hope that helps.

Here Are Some Links For Your Weekly Reading - July 9th, 2023

Here are some of the posts that caught my eye recently. Hope you find something interesting.

Lighter Links:

Trading Links:

Posted at 05:58 PM in Books, Business, Current Affairs, Gadgets, Games, Healthy Lifestyle, Ideas, Just for Fun, Market Commentary, Personal Development, Science, Trading, Trading Tools, Web/Tech