Last week, I talked about market performance in 2021. A decent portion of that article covered cryptocurrency and its recent downturn (after a stellar 2021). I'm a skeptic by nature, so it's hard for me to get behind any specific coin (even Bitcoin) right now.

This week, I discussed the topic with some good friends, including John Raymonds. John is much more active in the space and brought up some good points. One thing I noticed is that the discussion is starting to mature. People are beginning to think about secondary and tertiary value propositions. The conversation even made me think about repurposing some of the underutilized hardware in our server room for crypto-related purposes.

So, today, I want to focus on a different aspect of the equation … the potential value of cryptocurrency, and the blockchain behind it, as a technology.

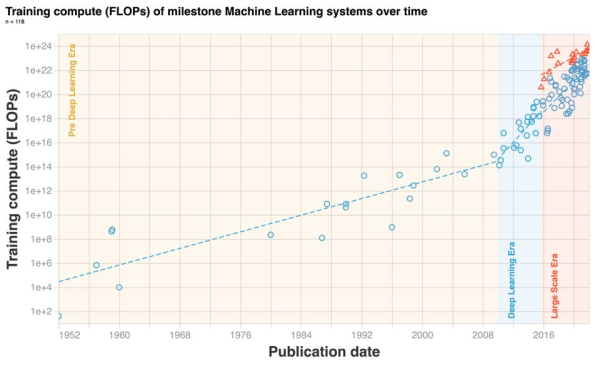

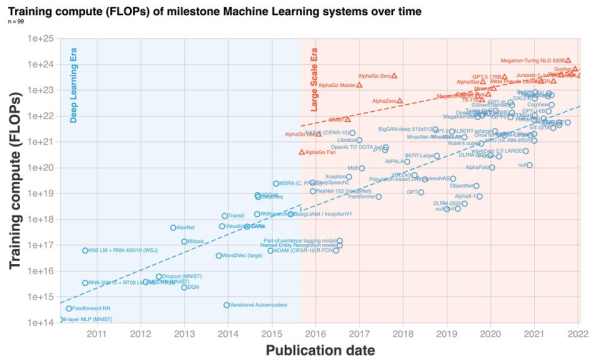

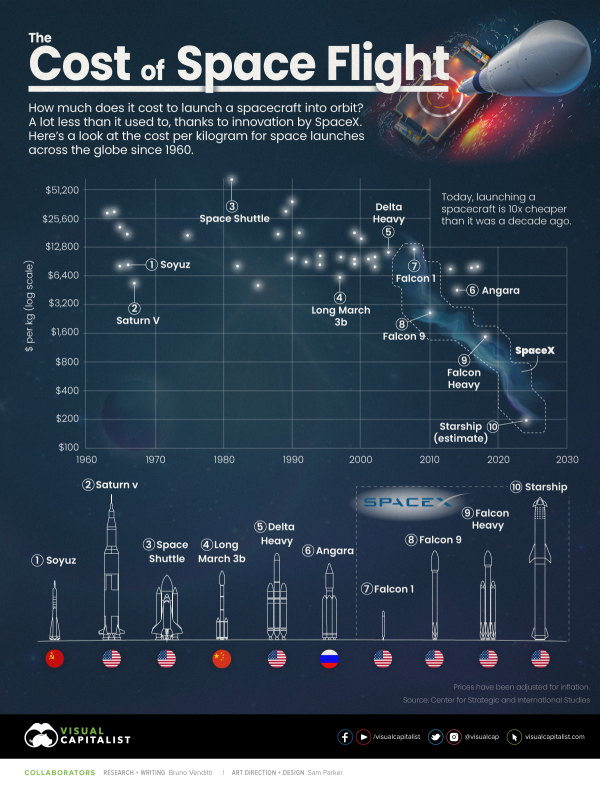

To start, I want to talk about industrial revolutions, in part because we're at another inflection point.

A Look at Industrial Revolutions

The Industrial Revolution has two phases: one material, the other social; one concerning the making of things, the other concerning the making of men. - Charles A. Beard

There are several turning points in our history where the world changed forever. Former paradigms and realities became relics of a bygone era.

Tomorrow's workforce will need different skills and will face different challenges than we do today. You can think of this shift as the Fourth Industrial Revolution, and it's worth comparing today's changes to our previous industrial revolutions.

The revolutions shared several traits. They were disruptive. They were centered on technological innovation. And they created cascading socio-cultural impacts.

Since most of us remember the third revolution, let's spend some time on that.

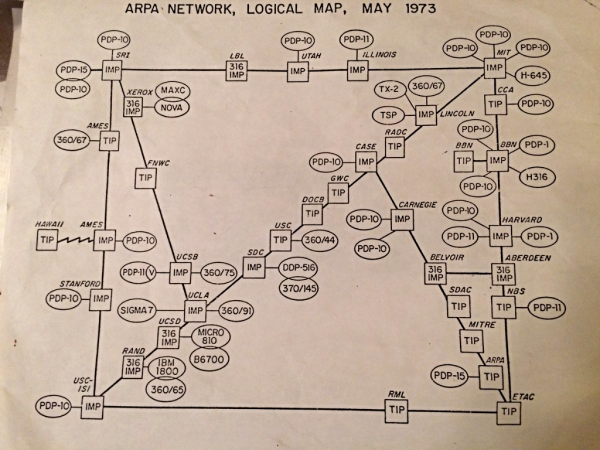

Here's a map of the entire "internet" in 1973.

Reddit via @WorkerGnome.

Most of us weren't using the internet back then, but you probably remember Web1 (static HTML pages, a 5-minute download to view a 3MB picture, and of course … waiting for a website to load over a dial-up connection before you could read it). It was still amazing.

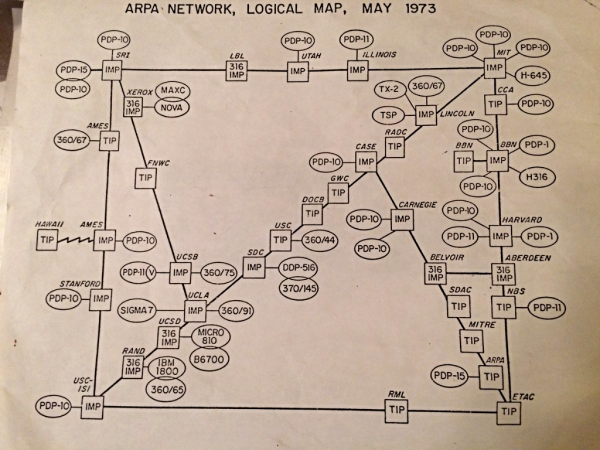

Then Web 2.0 came, and so did everything else we now associate with the internet: Facebook, YouTube, ubiquitous porn sites, Google. But Web 2.0 also brought user tracking and advertising, and we became the "product." Remember, you're not the customer of those platforms – advertisers are. And if you're not the customer, you're the product. That also means there's little reason for the platforms not to censor your thoughts to control the narrative.

Putting You In Control

Web3 (along with the blockchain and the technologies built on it) brings power back to the people.

It does this primarily through decentralized access that treats everyone equally. Bitcoin and other cryptocurrencies are already putting pressure on governments. Smart contracts and Ethereum are doing the same to banks and brokerages. Soon, even VCs will be impacted by OHM fork treasuries or initial DEX offerings (IDOs).

As Web3 matures, so will decentralized finance. That means big banks, governments, ISPs, and others will have less control over how the technology is applied and used.

Handled correctly, the resulting competition can discourage platforms from turning your digital presence into a product.

Removing Barriers

There are many practical ways this would impact your life – but let's look at one that's already happening.

El Salvador recently made Bitcoin legal tender. Talking about all the reasons this happened is beyond the scope of this article, but it does make El Salvador an excellent case study for the possibilities.

To start, it's now easier and quicker to buy a beer there (with Bitcoin) than it is in the US with cash. It could also help stabilize pricing even during a civil war, because Bitcoin is easy to move both into and out of the country.

It also means that people's money holds its value when they travel elsewhere.

Let's take this to the extreme. Say someone were to convert their entire net worth to Bitcoin and put it in a hardware wallet. They could conceivably memorize their seed phrase, throw the wallet in a fire, and fly to El Salvador with only the clothes on their back. After scrounging up enough money through odd jobs to buy another hardware wallet … they would be in a completely new country with their entire net worth and no other footprint. It's scary, especially for governments and their taxing authorities. But it also creates new possibilities and new freedoms.

Now, take it a step further. What would the world look like if you carried all your health data, insurance records, and more with you wherever you traveled? The world would become your oyster in a way that was almost impossible before.

And that's only the beginning.

But, to bring it back to my skepticism, there are a lot of roadblocks, a lot of interference, and a lot of time between today and a decentralized internet and financial system. For now, that thought experiment only really works if you're willing to move to El Salvador. Larger countries seem to be doing everything they can to discourage cryptocurrency adoption, though I think smaller countries see this as a chance to become one of the world's new hubs.

However, maybe it's time for this quote by Elon Musk:

"Stop being patient and start asking yourself, how do I accomplish my 10 year plan in 6 months? You will probably fail but you will be a lot further ahead than the person who simply accepted it was going to take 10 years."
