Your Brand Style Guide Isn’t Enough Anymore

Not long ago, high-quality art, music, and video had a built-in bottleneck: skill. If you wanted a specific emotional effect—or a certain level of craftsmanship—you either had to earn the craft yourself or hire someone who had.

That bottleneck is dissolving.

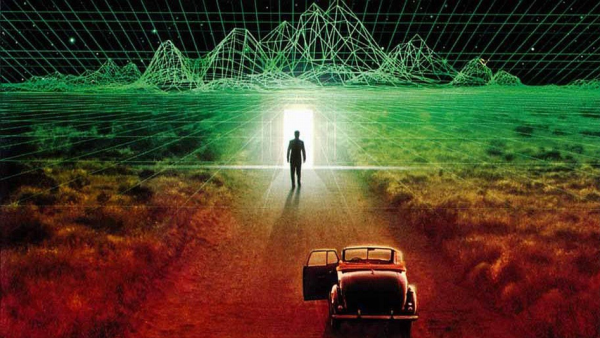

I recently watched an AI-generated music video on YouTube; if I hadn’t paid attention, I might not have known it was entirely created by technology rather than humans. Here’s the link.

Don’t expect to be wowed. I didn’t love the music or the video. But it’s still a notable achievement. Notice how much it feels like a professionally produced music video. While the production has some clear limitations, it doesn’t feel like a party trick (even though, technologically, it still is a party trick). It feels like art.

When I first watched it, I remember thinking it reminded me of a slightly older style of music. I couldn’t tell whether the words were Portuguese or Romanian. But I was focused on the little details, rather than its slick production or cool technology.

The singer, Lolita Cercel, is entirely a construct of Tom, a Bacau-based video designer. She doesn’t exist except in AI.

Neither does the music. Tom wanted to convey emotion through his song lyrics, and he decided AI was a powerful tool to turn his thoughts into things.

“I tried to make it as realistic as possible. The inspiration came from an 80-year-old collection of poems by a Romanian author who used colloquial, slum language. I liked the style and adapted it for ‘Lolita’ to make it authentic … It’s a mix of artificial intelligence and classical music. I work on several videos in parallel, shooting, editing, adjusting. Technology has allowed me to bring my ideas to life.”

– Tom

That moment matters because the world doesn’t need perfection for the game to change.

When the market believes “you can’t tell” whether something was produced by humans or technology, the operating assumptions of media, marketing, and trust start rewriting themselves.

Now, for the sake of this article, I’m not focused on the nature of art and artists. I’m focused on media and the nature of attraction and consumption, particularly in business contexts.

The Skill Shift: From “Making” to “Specifying + Judging”

Until recently, to create something truly captivating, you had to pay the best and the brightest and hope for the best.

It’s only really in the last 20 years that the average business could effectively test an ad before releasing it. Before that, ad agencies hired ‘Mad Men’ savants and a team of writers, designers, composers, artists, editors, and more to create a piece that would hopefully stand the test of time … or at least drive some sales.

The new advantage is more subtle — and ultimately more powerful: the ability to specify what you want and judge whether you got it. Often, with a minimal team.

Everyone can watch and react to content. Far fewer can define (clearly and repeatably) what they want to produce in the mind of another human (e.g., trust, reassurance, curiosity, confidence, or urgency). And even fewer can define what “good enough” means (or how they will measure it) before they generate the content.

In a world where production becomes cheap, taste becomes expensive.

From Brand Book to Brand Operating System

Style guides and brand books still matter. Voice, formatting, color choices, visual identity—none of that disappears.

AI changes the game by altering the volume and nature of what gets produced. As people are exposed to more and more content of similar quality and production values, what really changes is the level of what constitutes “average”.

With endless opportunities and distractions, the differentiator becomes consistency: your ability to deliver your promise again and again across channels and formats — without drifting into generic sameness.

That’s where a Brand Operating System comes in.

While a brand book is static, a Brand Operating System is a living specification that reliably turns identity into output and serves as a robust framework for AI initiatives.

A Brand Operating System (BrandOS for short) includes:

- Audience psychology: what your audience hopes for, fears, rejects, and values

- Proof standards: what they require to trust you (and what triggers skepticism)

- Ambiguity tolerance: how much uncertainty they’ll accept before confidence drops

- Response targets: the emotional outcomes you want to reliably provoke

- Guardrails: what you never do (tone, claims, promises, compliance boundaries)

- A recipe: the variables that make the output recognizably you

Put differently: the BrandOS is how you scale production without losing the signal or the soul of what makes you … you.
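To make “living specification” a little more concrete, here is a minimal sketch of how the six components above might be written down as a data structure. Everything in it is an assumption for illustration: the `BrandOS` class, its field names, the 0-to-1 scale for ambiguity tolerance, and the example values are hypothetical, not a standard or a product.

```python
from dataclasses import dataclass, field


@dataclass
class BrandOS:
    """Hypothetical container for the six BrandOS components."""
    audience_psychology: dict[str, list[str]]  # hopes, fears, rejects, values
    proof_standards: list[str]                 # what the audience needs before it trusts you
    ambiguity_tolerance: float                 # assumed scale: 0.0 (none) to 1.0 (high)
    response_targets: list[str]                # emotional outcomes to provoke
    guardrails: list[str]                      # what you never do
    recipe: dict[str, str] = field(default_factory=dict)  # signature variables

    def to_brief(self) -> str:
        """Render the spec as a plain-text brief to hand to a writer or a generation tool."""
        lines = [
            "Response targets: " + ", ".join(self.response_targets),
            "Proof standards: " + ", ".join(self.proof_standards),
            "Never do: " + ", ".join(self.guardrails),
        ]
        lines += [f"{name}: {value}" for name, value in self.recipe.items()]
        return "\n".join(lines)


# Illustrative values only.
example = BrandOS(
    audience_psychology={"fears": ["being oversold"], "values": ["evidence"]},
    proof_standards=["cited data", "named case studies"],
    ambiguity_tolerance=0.3,
    response_targets=["trust", "curiosity"],
    guardrails=["no absolute certainty", "no hype language"],
    recipe={"voice": "plainspoken, metaphor-driven"},
)
print(example.to_brief())
```

The point of the sketch is less the code than the discipline: once the components are explicit fields, the same brief can travel with every piece of content you produce, and it can be versioned as your understanding of the audience changes.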

“Experience” Is the Product & Feedback Loops Are the Engine

Here’s the thing: in a lot of these markets, results aren’t enough. Everyone can point to returns, claims, outputs—whatever. That stuff commoditizes fast.

What actually sticks is how the system behaves over time. Does it feel consistent? Does it make sense? Do you understand what it’s doing when things go right and when they don’t? That’s where trust comes from.

Under the surface, as AI or technology becomes more advanced, it’s harder for people to understand what it does. That’s why experience itself becomes the differentiator …

Good systems adapt over time. They are not only focused on the immediate outcome. They focus on learning, growing, and adapting to the practical realities of the environment and audience. One way to accomplish that is to use feedback loops to provide the system with better context on what’s happening, how it’s performing, and which areas may need attention or improved data.

I’ve been enjoying an app called Endel lately. It generates music on demand and can link to biometric signals. When I select the “Move” module, it uses data from devices such as an Apple Watch to adjust what it plays. As my pace changes — from walking to jogging — the cadence of the music shifts with me. It feels responsive, as if the system is listening, pacing, or even leading.

That’s the shift: closed-loop generation; generation that adapts to feedback.

We already do this in business:

- In marketing: opens, engagement, retention curves, where people stop watching

- In trading and investing: risk-adjusted targets, volatility stability, whether outcomes reflect skill or luck

A Brand Operating System is what happens when you make those loops explicit, measurable, and repeatable.

“Enough of Me” Has to Be Specified

If you want AI to magnify you instead of replacing you, you have to define what “you” means.

For me, “enough of me” looks like:

- A signature point of view: a high-level view across perspectives and of what’s possible

- Metaphors: because they compress complexity into something people can carry

- Constructive challenge: not to tear things down, but to test what to trust

Every person and every company has an equivalent set of signature variables—whether they’ve articulated them or not.

If you don’t specify them, the system will default to what it thinks performs. And performance alone often converges on generic engagement rather than authentic resonance.

Guardrails: The Power of “Forbidden Moves”

Here’s a practical truth: At scale, the most important part of your BrandOS isn’t what it produces … It’s what it refuses to produce.

Forbidden moves are how you protect trust. They ensure you get more of what you want and less of what you don’t—especially when content is manufactured at volume.

Examples of forbidden moves (adapt these to your domain):

- No absolute certainty in probabilistic environments

- No hype language that undermines trust with sophisticated audiences

- No claims without proof standards (define what counts as proof)

- No manufactured intimacy that mimics a relationship you didn’t earn

- No tone drift that breaks your promise (snarky, overly casual, overly salesy—whatever is off-brand)

Guardrails aren’t constraints. They’re how you keep the system aligned with the asset you’re actually building: credibility.
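As a rough illustration of how a couple of forbidden moves could be checked automatically, here is a small sketch that scans a draft for flagged phrases. The rule names mirror the list above, but the phrase patterns and the `find_violations` function are hypothetical, and keyword matching is only a first-pass filter. Moves like “manufactured intimacy” or “tone drift” would still need human judgment.

```python
import re

# Hypothetical phrase patterns; a real BrandOS would define its own per domain.
FORBIDDEN_PATTERNS = {
    "absolute certainty": [r"\bguaranteed?\b", r"\brisk[- ]free\b", r"\bcannot lose\b"],
    "hype language": [r"\brevolutionary\b", r"\bgame[- ]changing\b"],
}


def find_violations(text: str) -> dict[str, list[str]]:
    """Return each violated rule mapped to the phrases that triggered it."""
    violations: dict[str, list[str]] = {}
    for rule, patterns in FORBIDDEN_PATTERNS.items():
        hits: list[str] = []
        for pattern in patterns:
            hits.extend(m.group(0) for m in re.finditer(pattern, text, flags=re.IGNORECASE))
        if hits:
            violations[rule] = hits
    return violations


draft = "Our revolutionary strategy is guaranteed to outperform."
print(find_violations(draft))
```

A draft that comes back with an empty result is not automatically on-brand; it has only cleared the cheapest check. The value is in catching the obvious misses before a person ever has to read them.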

Entropy Is Inevitable—So Detect It Early

The risk of outsourcing capability is that the tool changes. Models update. Distribution shifts. Channels fatigue. What worked last quarter can quietly stop working next month.

We’ve discussed this before, but almost everything decays or drifts over time. It’s important to be able to measure that. Here are two examples:

- Marketing drift: if open rates drop materially or engagement falls, something is drifting.

- Trading drift (high level): if risk-adjusted targets degrade, volatility exceeds targets, or outcomes start to look like luck rather than understanding, something is drifting.
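One simple way to turn “something is drifting” into a measurable signal is to compare a recent window of a metric against an earlier baseline window. The sketch below does that for something like weekly open rates. The `is_drifting` function, the window sizes, and the 15% threshold are assumptions chosen for illustration, not recommended values.

```python
from statistics import mean


def is_drifting(
    history: list[float],
    baseline_window: int = 8,
    recent_window: int = 4,
    max_drop: float = 0.15,
) -> bool:
    """Return True when the recent average falls more than max_drop (as a fraction) below the baseline."""
    if len(history) < baseline_window + recent_window:
        return False  # not enough data to judge either way
    baseline = mean(history[-(baseline_window + recent_window):-recent_window])
    recent = mean(history[-recent_window:])
    if baseline <= 0:
        return False  # a relative drop is undefined without a positive baseline
    return (baseline - recent) / baseline > max_drop


# Illustrative data: eight weeks near 40% open rate, then four weeks near 30%.
open_rates = [0.40] * 8 + [0.30] * 4
print(is_drifting(open_rates))
```

The same shape works for other metrics where higher is better, such as retention or a risk-adjusted return. For metrics where higher is worse, such as volatility against a target, the comparison would need to be flipped.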

No technique always works.

But something is always working.

The winners aren’t the ones who find a trick and freeze it. They’re the ones who build systems that notice change early, recalibrate, and keep moving forward.

The Real Choice

Your choice isn’t really whether or not to use AI. If you don’t, you’re going to get left behind.

AI will continue to make ‘real’ cheap; your BrandOS is how to keep your “meaning” valuable.

Your choice is whether you’ll let AI optimize you into generic engagement, and eventual irrelevancy … or whether you’ll build a BrandOS that protects what makes you you, while adapting fast enough to stay ahead of drift.