Technology is a massive differentiator in today's competitive landscape.

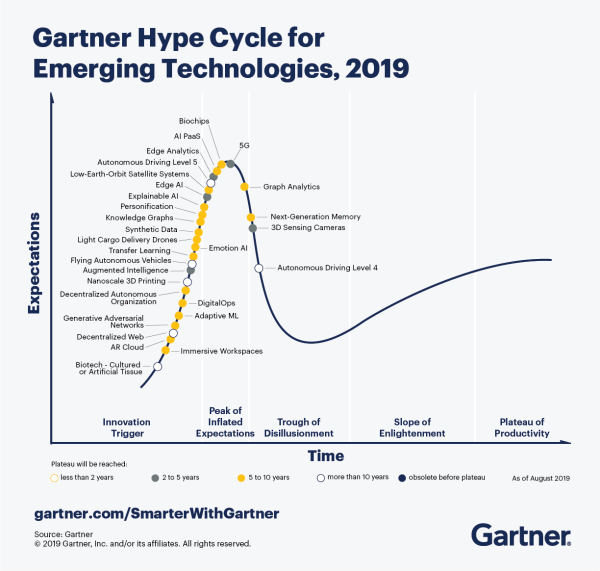

Sorting through predictions of which new technologies are going to impact the world and which are going to fizzle out can be an overwhelming task. I look forward to Gartner's report each year as a benchmark to compare reality against.

Last year, Gartner reported Deep Learning and Biochips were at the top of the hype cycle – in the "peak of inflated expectations." While I'm excited about both industries, there was certainly more buzz than actual improvement in those spaces last year. Excitement almost always exceeds realistic expectations when technologies gain mainstream appeal.

What's a "Hype Cycle"?

As technology advances, it is human nature to get excited about the possibilities and to get disappointed when those expectations aren't met.

At its core, the Hype Cycle tells us where a technology sits in its timeline and how long it is expected to take to reach maturity. It attempts to tell us which technologies will survive the hype and have the potential to become a part of our daily lives.

Gartner's Hype Cycle Report is a considered analysis of market excitement, maturity, and the benefit of various technologies. It aggregates data and distills more than 2,000 technologies into a succinct and contextually understandable snapshot of where various emerging technologies sit in their hype cycle.

Here are the five regions of Gartner's Hype Cycle framework:

- Innovation Trigger (a potential technology breakthrough kicks things off),

- Peak of Inflated Expectations (early publicity produces success stories),

- Trough of Disillusionment (interest wanes),

- Slope of Enlightenment (second- and third-generation products appear), and

- Plateau of Productivity (mainstream adoption starts).
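If you like to think in code, the five regions can be treated as an ordered scale. Here's a minimal Python sketch of that idea. The `HypePhase` and `past_the_peak` names are my own illustration, not anything Gartner publishes, and the two placements are the ones from last year's report mentioned earlier.

```python
from enum import IntEnum


class HypePhase(IntEnum):
    """The five regions of the Hype Cycle, in the order a technology moves through them."""
    INNOVATION_TRIGGER = 1
    PEAK_OF_INFLATED_EXPECTATIONS = 2
    TROUGH_OF_DISILLUSIONMENT = 3
    SLOPE_OF_ENLIGHTENMENT = 4
    PLATEAU_OF_PRODUCTIVITY = 5


def past_the_peak(phase: HypePhase) -> bool:
    """Return True once a technology has moved beyond the Peak of Inflated Expectations."""
    return phase > HypePhase.PEAK_OF_INFLATED_EXPECTATIONS


# Placements from last year's report, as described earlier in this article.
last_year = {
    "Deep Learning": HypePhase.PEAK_OF_INFLATED_EXPECTATIONS,
    "Biochips": HypePhase.PEAK_OF_INFLATED_EXPECTATIONS,
}

for name, phase in last_year.items():
    print(f"{name}: {phase.name}, past the peak: {past_the_peak(phase)}")
```

Using an ordered enum is just one convenient choice: it lets you compare phases directly, which is all the "where are we on the curve?" question really needs.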

Understanding this hype cycle framework enables you to ask important questions like "How will these technologies impact my business?" and "Which technologies can I trust to stay relevant in 5 years?"

That being said – it's worth acknowledging that the hype cycle can't predict which technologies will survive the trough of disillusionment and which ones will fade into obscurity.

What's exciting this year?

It's worth noting that in this edition of the hype cycle, Gartner shifted toward introducing new technologies at the expense of technologies that would normally persist through multiple iterations of the cycle; 21 new technologies were added to the list. For comparison, here's my article from last year, and here's my article from 2015. The chart below shows this year's Hype Cycle.

via Gartner

This year's ~30 key technologies were selected from over 2,000 technologies and bucketed into five major trends:

- Sensing and Mobility represents technologies that are gaining more detailed awareness of the world around them, like 3D sensing cameras, the next iteration of autonomous driving, and drones. Improvements in sensor technology, and in how sensors communicate through the IoT, are leading to more data and more insight.

- Augmented Human builds on the "Do It Yourself Biohacking" trend from last year. It represents technologies that improve both the cognitive and physical abilities of humanity – technologies like biochips, augmented intelligence and robotic skin. The future is bringing implants that extend humans past their perceived limits and increase our understanding of our bodies: biochips with the potential to detect diseases, synthetic muscles, and neural implants. Many of my friends believe this realm will extend human lifespans.

- Postclassical Compute and Comms represents new architectures of classical computing technologies like 5G or nanotech – it results in faster CPUs, denser memory and increased throughput. Innovation is commonly thought of as new technologies, but better versions of existing technologies can provide just as much value – and disrupt industries in a very similar way.

- Digital Ecosystems are platforms that connect various types of "actors." They create seamless communication between companies, people and APIs. This enables more efficient decentralized organizations and allows constant adoption of new evolutions in technology. Examples of this technology are the decentralized web, synthetic data, and decentralized autonomous organizations.

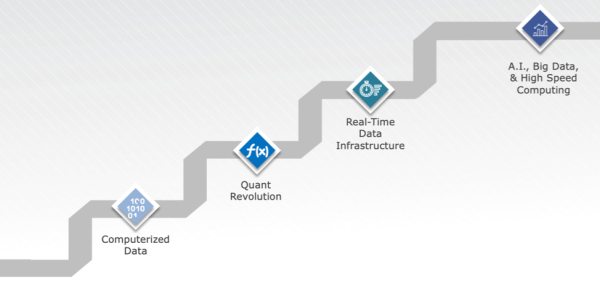

- My wheelhouse, Advanced AI and Analytics, is an acknowledgment of new classes of algorithms and data science that are leading to new capabilities, deeper insights, and adaptive AI. The future of this space involves more accurate predictions and recommendations on smaller data sets. More signal. Less noise.

Looking past the overarching trends of this year, it's also fun to look at which technologies are just starting their hype cycle.

- Artificial Tissue (Biotech) could be used to repair or replace portions of tissues, or whole tissues (cartilage, skin, muscle, etc.).

- Flying Autonomous Vehicles can be used as taxis, but also as transports for other things such as medical supplies, food delivery, etc. Amazon and Uber are likely excited about this development – and expect it in the next couple of years.

- Decentralized Web builds on the same arguments blockchain makes against traditional currencies. Because the mainstream centralized web is dominated by massive, corporate-controlled platforms like Facebook and Google, the decentralized web movement strives to enable free speech and increase access for users whose access to the internet is strictly regulated.

- Transfer Learning refers to the ability of an AI to solve one problem and apply that "lesson" to a different but related problem. When AI becomes able to generalize knowledge more abstractly, you will see a massive spike in utilization.

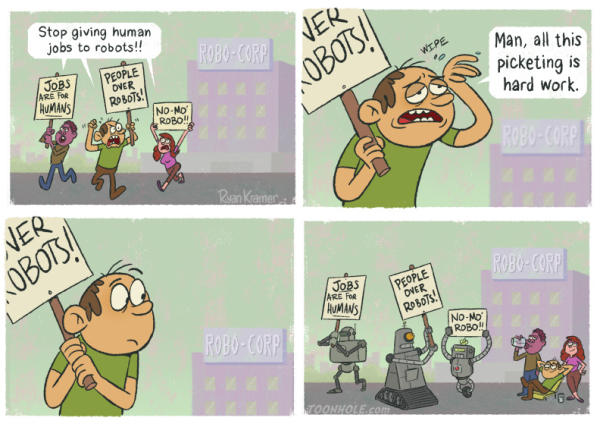

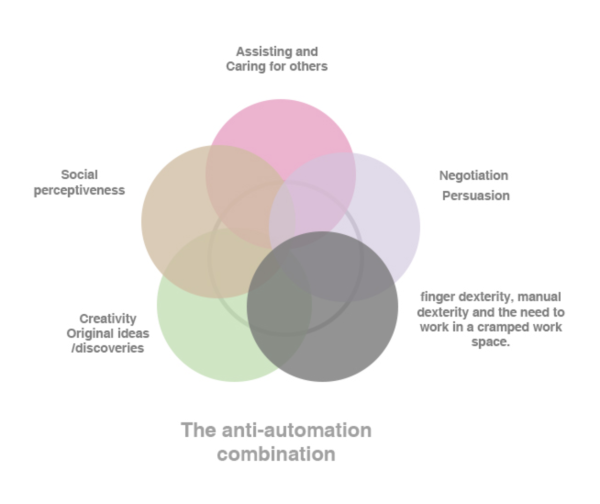

- Augmented Intelligence complements humans instead of replacing them with robots. To be clear – augmented intelligence is a subset of AI, but it reflects a different perspective on, and approach to, its adoption.
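To make the Transfer Learning idea from the list above a bit more concrete, here's a toy Python sketch. It uses parameter reuse (fine-tuning), which is only one simple form of transfer learning: a tiny linear model is trained on a data-rich "source" problem, then its learned weights are used as the starting point for a related "target" problem with only three examples. The data, the `train` and `mse` helpers, and the step counts are all made up for illustration; real systems do this with deep networks, not a two-parameter line.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(data, w=0.0, b=0.0, steps=500, lr=0.05):
    """Fit y = w*x + b with plain gradient descent, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Source problem: plenty of data drawn from y = 2x + 1 (hypothetical).
source_data = [(k / 10, 2 * (k / 10) + 1) for k in range(-20, 21)]

# Target problem: related but different (y = 2x + 1.5), with only three examples.
target_data = [(-1.0, -0.5), (0.0, 1.5), (1.0, 3.5)]

# Learn the "lesson" on the source problem.
w_src, b_src = train(source_data)

# Give both approaches the same small training budget on the target problem.
w_scratch, b_scratch = train(target_data, steps=5)
w_transfer, b_transfer = train(target_data, w=w_src, b=b_src, steps=5)

scratch_loss = mse(w_scratch, b_scratch, target_data)
transfer_loss = mse(w_transfer, b_transfer, target_data)

print(f"from scratch:  loss = {scratch_loss:.3f}")
print(f"with transfer: loss = {transfer_loss:.3f}")
```

With the same handful of training steps, the model that starts from the source weights ends up with a much lower error on the target problem than the one that starts from zero. That head start is the whole appeal.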

AI has been around since the 1950s, but technological advancement and increased data mean we are now in an AI spring after decades of stagnation.

Many of these technologies have been hyped for years – but the hype cycle is different from the adoption cycle. We often overestimate what will happen in a year and underestimate what will happen in 10.

Which technologies do you think will survive the hype?