Every year, Stanford publishes its AI Index, a massive collection of data that attempts to sum up the current state of AI.

The 190-page report details where research is going and covers current specs, ethics, policy, and more.

It is super nerdy … yet, it's probably worth a skim.

Here are a few things that caught my eye and might help set some high-level context for you.

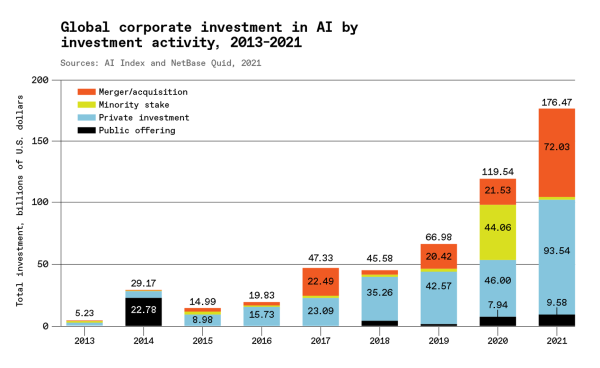

Investments in AI

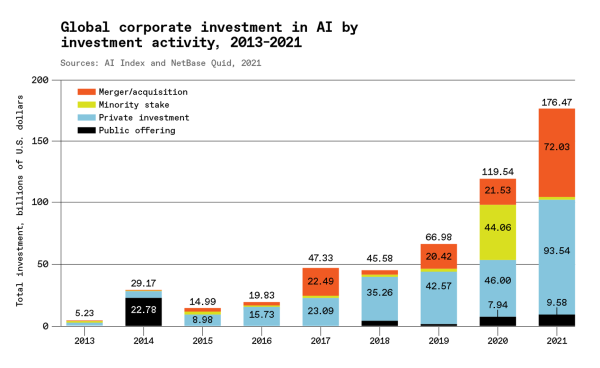

via AI Index 2022

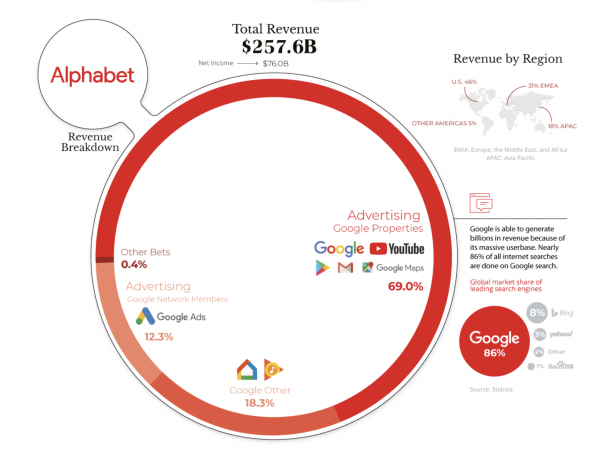

In 2021, private investment in AI totaled over $93 billion, more than double the amount invested in 2020. However, fewer new companies received funding: the number of newly funded AI companies dropped from 1051 in 2019 to 746 in 2021.
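To put the company counts in perspective, here is a quick back-of-the-envelope check as a minimal Python sketch. It only uses the two figures quoted above (1051 and 746); the helper name `percent_change` is my own, not something from the report.

```python
def percent_change(old: float, new: float) -> float:
    """Return the relative change from old to new, as a percentage."""
    return (new - old) / old * 100


# Company counts quoted above from the AI Index 2022.
funded_2019 = 1051
funded_2021 = 746

drop = percent_change(funded_2019, funded_2021)
print(f"Funded companies changed by {drop:.1f}% from 2019 to 2021")
```

In other words, roughly 29% fewer companies received new funding, even as the total dollars more than doubled.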

At the extreme, concentrating resources in fewer hands raises the risk of monopolies. But it's still early in the game, and it is reasonable to read this consolidation as separating the wheat from the chaff. As these companies mature, the drop-off resembles what happened when the web began its exponential growth.

With investment increasing and the field consolidating, you can expect to see massive improvements in the state of AI over the next few years.

We knew that already – but following the money is a great way to identify a trend.

Increased regulation is another trend you should expect as AI matures and proliferates.

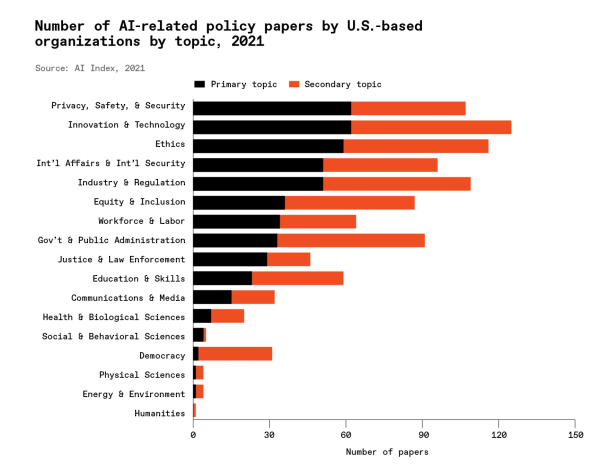

Ethical AI

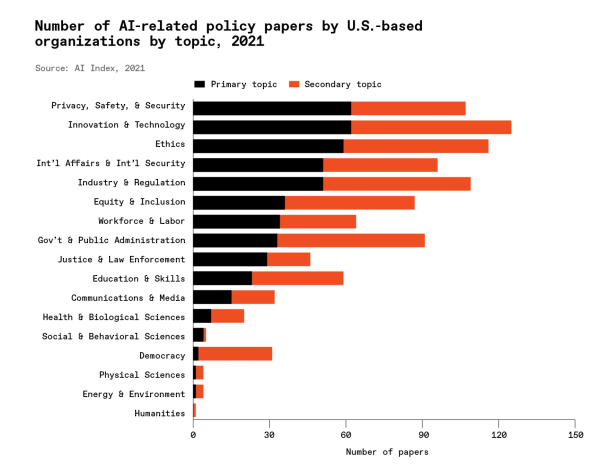

via AI Index 2022

Research on the ethics of AI is becoming much more widespread. It shapes academic papers, and it is also a catalyst for new laws.

AI's academic and philosophical implications are being taken much more seriously across the board. Many people recognize that AI has the potential to impact the world in unprecedented ways. As a result, its promise and peril are under constant scrutiny.

The adoption of AI might seem slow … but like electricity (or the internet), it only seems slow until it's suddenly ubiquitous.

As you find AI in more domains, the ethics of its use becomes a more pressing concern. There is a lot of potential for abuse of technologies like facial recognition and deepfakes. Likewise, people worry about mistakes, judgment, and who's liable for errors in technologies like self-driving cars.

Luckily, many of the world's greatest minds are working on the subject, including those at the Hastings Center.

Many factors contribute to the speed of AI's maturation and adoption. Here are three of the obvious reasons. First, hardware and software are getting better. Second, we have access to more and better data than ever before. And third, more people are actively seeking to leverage these capabilities for their benefit.

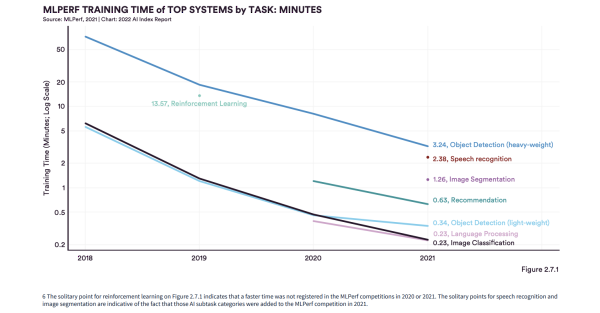

Technical Improvements

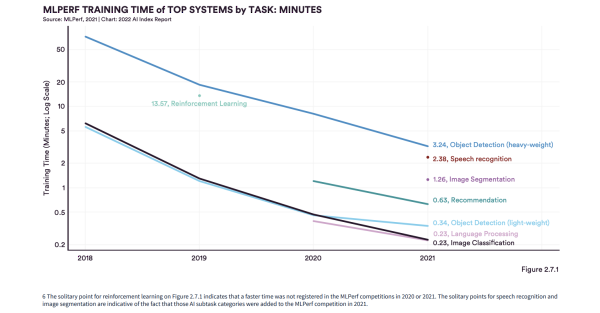

via AI Index 2022

Top-performing hardware systems can reach baseline levels of performance in task categories like recommendation, lightweight object detection, image classification, and language processing in under a minute.

Not only that, but the cost to train systems is also decreasing. By one measure, training costs for image classification systems have dropped by a factor of 223 since 2017.
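A "factor of 223" is easy to gloss over, so here is what it means in percentage terms, as a minimal Python sketch. The $1,000 starting cost is a hypothetical number chosen only for illustration; the 223 is the figure quoted above.

```python
# Reduction factor quoted above for image classification training costs.
reduction_factor = 223

# Fraction of the original cost that remains after an N-fold reduction.
remaining = 1 / reduction_factor
reduction = 1 - remaining

# Hypothetical starting cost, used only to make the ratio concrete.
hypothetical_cost_2017 = 1000.0
hypothetical_cost_now = hypothetical_cost_2017 * remaining

print(f"Remaining cost: {remaining:.2%} of the original ({reduction:.2%} cheaper)")
print(f"A hypothetical ${hypothetical_cost_2017:,.0f} job would now cost about ${hypothetical_cost_now:.2f}")
```

Put differently, something that once cost about $1,000 to train would cost under $5 today.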

When people think of advancements in AI, they often think of the humanization of technology. While that may eventually happen, most of the progress in AI comes from more practical improvements and applications. Think of these as discrete capabilities (like individual Lego blocks) that help you do something better than before. These capabilities are easily stacked to create prototypes that do more. Prototypes mature into products when the capabilities are robust and reliable enough to allow new users to achieve desired results. The next stage happens when the capabilities mature to the point that people use them as the foundation or platform to do a whole new class of things.

We're past the trough of disillusionment and on the slope of enlightenment.

Practical use cases abound, which means these technologies are no longer just for giant companies.

AI is ready for you to use.

To use a seasonal metaphor, it is "springtime" for AI (a time of rapid growth). But it won't be springtime for you unless you plant the seeds, water them, and start building the capabilities to understand and use what sprouts.

As a reminder, it isn't really about the AI … it is about understanding the results you want, the competitive advantages you need, and the data you're feeding it (or getting from it) so that you know whether something is working.

You've probably heard the phrase "garbage in, garbage out." It is especially true with AI. Top results across technical benchmarks have increasingly relied on extra training data for combinatorial and dimensional reasons: as of 2021, the state-of-the-art systems on 9 of the 10 benchmarks in this report were trained with extra data. Extra data also matters because it lets insights compound, so systems keep learning and improving.

To read more of my thoughts about these topics, you can check out this article on data and this article on alternative datasets.

Conclusion

AI capabilities are becoming much more robust and better able to transfer their learnings to new domains. They're taking in broader datasets and producing better results (while requiring less investment to do so).

It isn't a question of "if" … it is a question of "when."

AI is exciting and inevitable!

Let me know if you have questions or comments.
