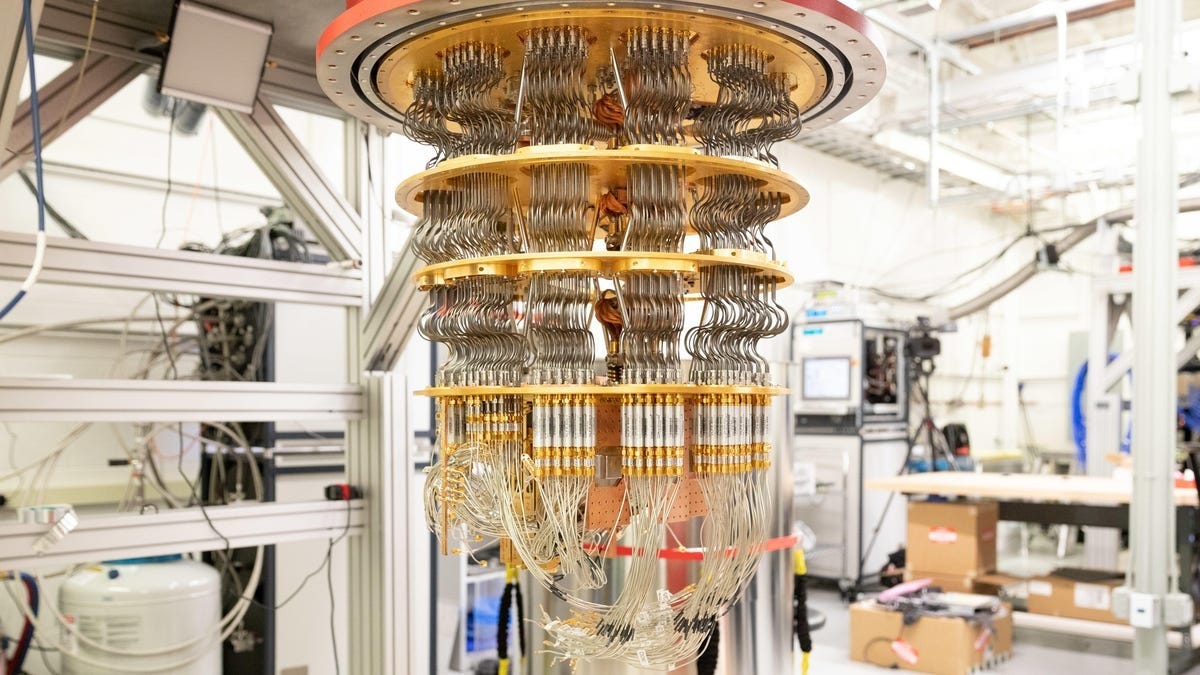

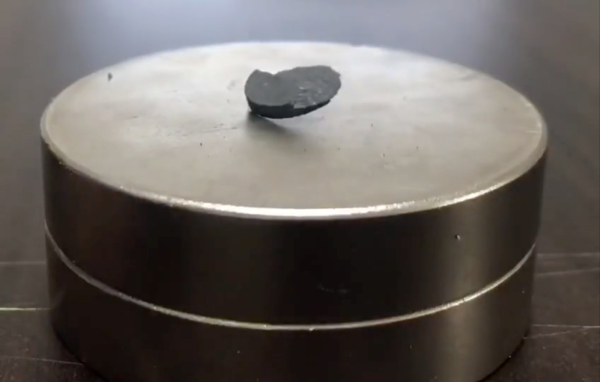

Last week, I talked about the potential for room-temperature superconductors.

In that discussion, I noted that we're now in the Fourth Industrial Revolution, driven in part by better and more connected chips (semiconductors).

I want to revisit industrial revolutions because we're at an inflection point in AI and chips.

A Look at Industrial Revolutions

The Industrial Revolution has two phases: one material, the other social; one concerning the making of things, the other concerning the making of men. - Charles A. Beard

There are several turning points in our history where the world changed forever. Former paradigms and realities became relics of a bygone era.

Tomorrow's workforce will need different skills and will face different challenges than we do today. Many call this shift the Fourth Industrial Revolution. To put today's changes in context, compare them with the previous industrial revolutions:

- First industrial revolution - Steam power and the creation of factories

- Second industrial revolution - Introduction of the assembly line and mass production

- Third industrial revolution - Computers and the World Wide Web connect the world, enabling the digital age

These revolutions shared several traits. They were disruptive. They centered on technological innovation. And they triggered cascading socio-cultural impacts.

Since most of us remember the third revolution, let's spend some time on that.

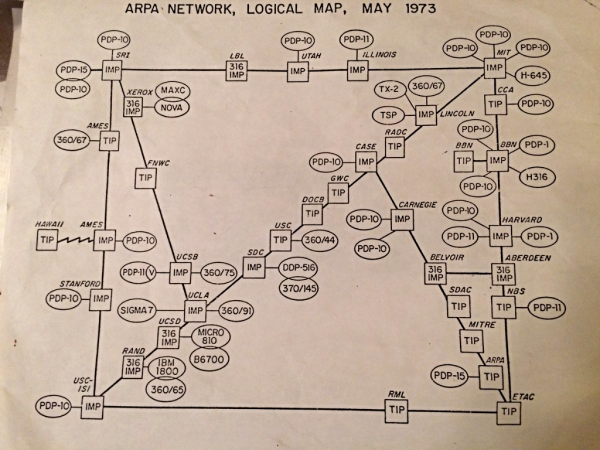

Here's a map of the entire "internet" in 1973.

Reddit via @WorkerGnome.

Most of us weren't using the internet at that point, but you probably remember Web 1.0: static HTML pages, a 5-minute download to view a 3Mb picture, and, of course, waiting for a website to load over a dial-up connection before you could read it. It was still amazing!

Then Web 2.0 arrived, and with it everything we now associate with the internet: Facebook, YouTube, ubiquitous porn sites, and Google. But Web 2.0 also brought user tracking and advertising, which meant that we became the "product." Remember, you're not the customer of those platforms; advertisers are. And if you're not the customer, you're the product. When you're not the customer, nothing stops the platforms from censoring what you see, hear, or experience to control the narrative.

Now, with Web3, the focus is shifting to blockchain and the technologies built on it.

Where we are and where we are going

I believe that, if managed well, the Fourth Industrial Revolution can bring a new cultural renaissance, which will make us feel part of something much larger than ourselves: a true global civilization. I believe the changes that will sweep through society can provide a more inclusive, sustainable and harmonious society. But it will not come easily. – Klaus Schwab

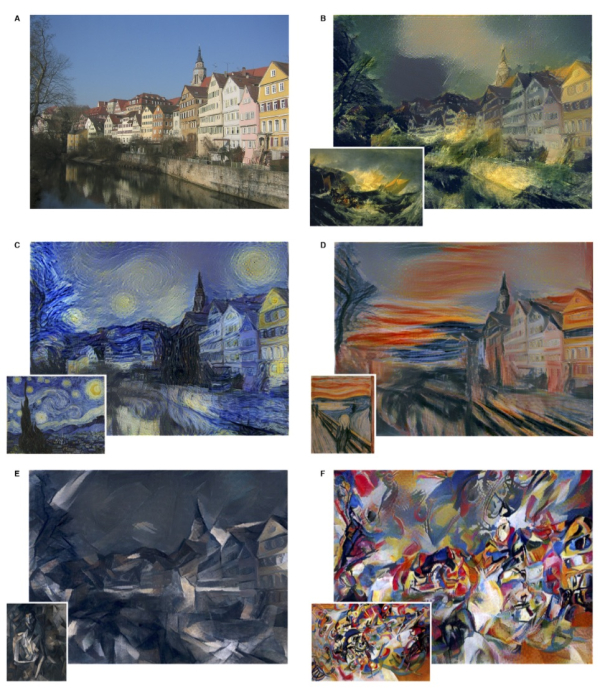

With Web3, AI, better chips, and more, we're at another inflection point. As a result, the game is changing, and so are the rules, the players, and what it means to win.

At significant transition points, it is easy to see fear, resistance, and a push to keep things the same. Yet, time marches on. Much of the pain felt during these transitions occurs because people hesitate to adapt. As a result, the wave crashes on them instead of them riding it to safety.

Robots can do many things, but they've yet to match humanity's creativity and emotional insight. As automation spreads to more jobs, the need for management, creativity, and decision-making won't go anywhere … data and analytics might augment them, but they won't disappear.

Our uniqueness and flexibility are what keep us useful. AI and automation free us up to be our best selves and to explore new possibilities.

All of these changes bring about a decentralization of power – and a new set of freedoms for people – including the ability to discover and adopt capabilities in less time and with less effort. But, to return to my skepticism, there are still many roadblocks and sources of interference between now and the point where consumers are back in control, and getting there will take time.

We can shorten that distance, though. This reminds me of a quote by Elon Musk:

"Stop being patient and start asking yourself, how do I accomplish my 10 year plan in 6 months? You will probably fail but you will be a lot further ahead than the person who simply accepted it was going to take 10 years."

One of an entrepreneur's most powerful capabilities is the ability to shorten time – and get more done than others thought possible.

Onwards!
