“Nobody phrases it this way, but I think that artificial intelligence is almost a humanities discipline. It's really an attempt to understand human intelligence and human cognition.” —Sebastian Thrun

We often use human consciousness as the ultimate benchmark for artificial exploration.

The human brain is ridiculously intricate. While weighing only about three pounds, it contains roughly 86 billion neurons (often rounded up to 100 billion) and on the order of 100 trillion connections between them. On top of the sheer complexity, the way those connections are organized, and the sequence in which the brain naturally does things, make it even harder to replicate. The human brain is also constantly reorganizing and adapting. It's a beautiful piece of machinery.

Evolution had millions of years to build this powerhouse of a computer, and now we're trying to do the same with neural networks and machines in a fraction of the time. While deep learning algorithms have been around for decades, we're only now accumulating enough data and computing power to turn deep learning from a thought experiment into a real edge.

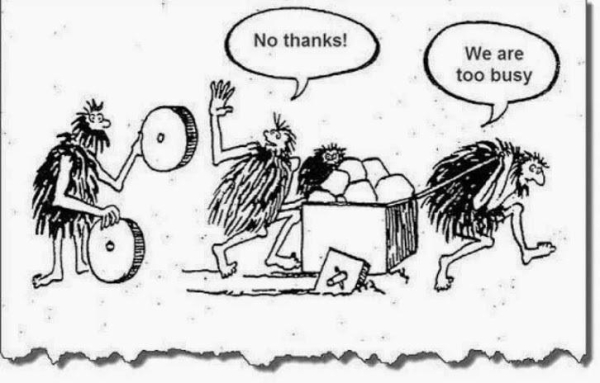

Think of it this way: when talking about the human brain, we talk about left-brain and right-brain. The popular theory is that left-brain activities are analytical and methodical, and right-brain activities are creative, free-form, and artistic. We're great at training AI for left-brain activities (obviously with exceptions). In fact, AI is beating us at these left-brain activities because computers have a much higher input bandwidth than we do, they're less biased, and they can perform 10,000 hours of research by the time you finish this article.

It's tougher to train AI for right-brain tasks. That's where deep learning comes in.

Deep learning is a subset of machine learning built on neural networks with many layers. It can be trained with or without labels, and a big part of its appeal is that it can learn directly from unstructured or unlabeled data. Instead of asking AI a question, giving it metrics, and letting it chug away, you're letting AI be intuitive. Deep learning is loosely modeled on the human brain. It stacks layers of artificial neurons (convolutional neural networks are one well-known example), mixing linear and non-linear operations so it can solve problems more flexibly across varied data sets and in unseen environments.
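To make the mix of linear and non-linear operations a bit more concrete, here is a minimal sketch of a two-layer network in plain Python. Everything in it is an assumption for illustration: the names `relu`, `layer`, and `tiny_network` are made up, and the weights are hand-picked rather than learned. Real deep learning systems learn their weights from data and have far more layers and units.

```python
def relu(x):
    """Non-linear step: negative values are clipped to zero."""
    return max(0.0, x)


def layer(inputs, weights, biases, activation=None):
    """One layer: a weighted sum per unit (linear), then an optional non-linearity."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(activation(total) if activation else total)
    return outputs


# Hand-picked (not learned) weights, chosen only for illustration.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def tiny_network(x1, x2):
    """Hidden layer with ReLU, followed by a purely linear output layer."""
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, activation=relu)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, tiny_network(a, b))
```

With these particular weights the network returns 1.0 when exactly one input is 1 and 0.0 otherwise (the XOR pattern). No single linear layer can produce that pattern, which is why the non-linear step in the middle matters.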

When a baby is first learning to walk, it might stand up and fall down. It might then take a small stutter step, or maybe a step that's much too far for its little baby body to handle. It will fall, fail, and learn. Fall, fail, and learn. That trial-and-error loop is very similar to how reinforcement learning works, and reinforcement learning is often paired with deep learning.

What's missing is the intrinsic reward that keeps humans moving when the extrinsic rewards aren't coming fast enough. AI can beat humans at many games but has struggled with puzzle games and platformers, where rewards are sparse and there's not always a clear objective outside of clearing the level.

A relatively new (in practice, not in theory) approach is to train AI around "curiosity" [1], rewarding it for reaching situations it can't yet predict well. Curiosity helps AI get past that barrier. Curiosity lets humans explore and learn for vast periods of time with no reward in sight, and it looks like it can do that for computers too!
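One common way to turn curiosity into a number is to give the agent a small model that predicts what happens next, and to reward the agent by how wrong that prediction turns out to be. The sketch below is a toy version of that idea, not the method from [1]: the linear `ForwardModel`, the `toy_environment` dynamics, and the learning rate are all assumptions made up for illustration, and real systems use neural networks over learned features.

```python
class ForwardModel:
    """Toy linear model: next_state is approximated by w_s*state + w_a*action + b."""

    def __init__(self, learning_rate=0.1):
        self.w_s = 0.0
        self.w_a = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, state, action):
        return self.w_s * state + self.w_a * action + self.b

    def update(self, state, action, next_state):
        """Take one gradient step; return the squared error measured before the step."""
        error = self.predict(state, action) - next_state
        self.w_s -= self.learning_rate * error * state
        self.w_a -= self.learning_rate * error * action
        self.b -= self.learning_rate * error
        return error ** 2


def curiosity_reward(model, state, action, next_state):
    """Intrinsic reward: how badly the model predicted this transition."""
    return model.update(state, action, next_state)


def toy_environment(state, action):
    """Hypothetical dynamics: the action simply shifts the state."""
    return state + action


model = ForwardModel()

# Visit the same transition 50 times: it becomes familiar, so the reward fades.
familiar_rewards = []
for _ in range(50):
    next_state = toy_environment(1.0, 1.0)
    familiar_rewards.append(curiosity_reward(model, 1.0, 1.0, next_state))

# A transition the model has never seen is surprising again.
novel_reward = curiosity_reward(model, -3.0, -1.0, toy_environment(-3.0, -1.0))

if __name__ == "__main__":
    print("first familiar reward:", familiar_rewards[0])
    print("last familiar reward:", familiar_rewards[-1])
    print("novel reward:", novel_reward)
```

The reward for the repeated transition shrinks toward zero as the model learns it, while the unseen transition earns a large reward again. That is the push to go explore: familiar territory stops paying out, so the agent is nudged toward whatever it doesn't understand yet.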

Soon, I expect to see AI learn to forgive and forget, be altruistic, follow and break rules, learn to resolve disputes, and even value something that resembles "love" to us.

Exciting stuff!

_______

[1] Yuri Burda, Harri Edwards, Deepak Pathak, Amos Storkey, Trevor Darrell, and Alexei A. Efros. Large-Scale Study of Curiosity-Driven Learning. In ICLR 2019.
