In 2016, I received this e-mail from my oldest son, who used to be a cybersecurity professional.

Date: Saturday, October 22, 2016 at 7:09 PM

To: Howard Getson

Subject: FYI: Security Stuff

FYI – I just got an alert that my email address and my Gmail password were available to be purchased online.

I only use that password for my email, and I have 2-factor enabled, so I'm fine. Though this is further proof that just about everything is hacked and available online.

If you don't have two-factor enabled on your accounts, you really need to do it.

Since then, security has only become a more significant issue. I wrote about the Equifax event, but there are countless examples of similar events (and yes, I mean countless).

When people think of hacking, they often think of a Distributed Denial of Service (DDoS) attack or the media's portrayal of someone breaking into your system as part of a heist.

In reality, the most significant weakness is people; it's you … the user. It's the user who turns off automatic patch updating. It's the user who uses thumb drives. It's the user who reuses the same passwords. But, even if you do everything right, you're not always safe.

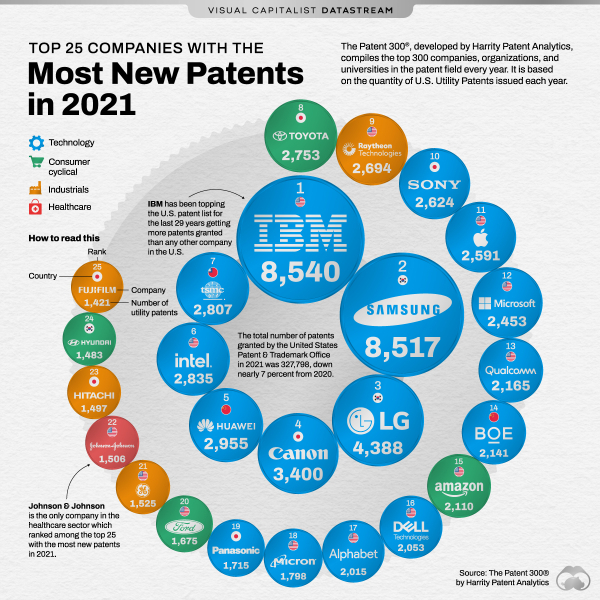

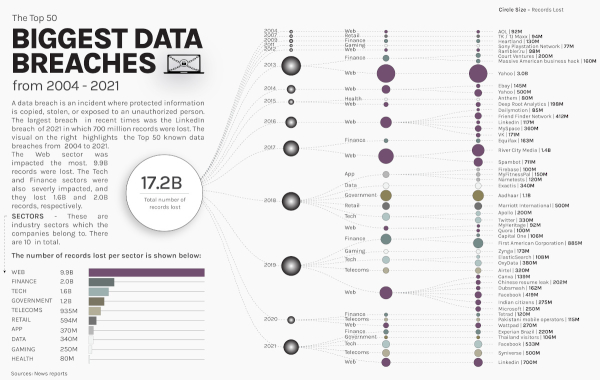

Your data is likely stored in dozens of places online. You hope your information is encrypted, but even that isn't always enough. Over the last 17 years, 17.2B records have been "lost" by various companies. In 2021, a new record was set with 5.9 billion user records stolen.

VisualCapitalist put together a visualization of the 50 biggest breaches since 2004.

Click To See Full Size via VisualCapitalist

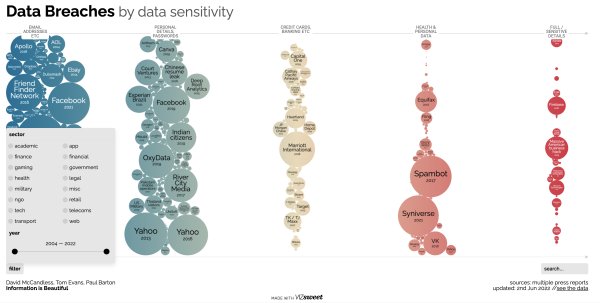

InformationIsBeautiful also put together a great interactive visualization of all the breaches, if you want to do more research.

Click To See Interactive Version via InformationIsBeautiful

It's impossible to protect yourself completely, but there are many simple things you can likely do better.

- Use better passwords… Even better, don't even know them. You can't disclose what you don't know. Consequently, I recommend a password manager like LastPass or 1Password, which can also suggest complex passwords for you.

- Check if any of your information has been stolen via a website like HaveIBeenPwned or F-Secure

- Keep all of your software up to date (to avoid extra vulnerabilities)

- Don't use public Wi-Fi if you can help it (and use a VPN if you can't)

- Have a firewall on your computer and a backup of all your important data

- Never share your personal information in an e-mail or on a call that you did not initiate – if they legitimately need your information, you can call them back

- Don't trust strangers on the internet (no, a Nigerian Prince does not want to send you money)

- Hire a third-party security company like eSentire or Pegasus Technology Solutions to help monitor and protect your corporate systems
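
To make the first tip a little more concrete, here is a minimal Python sketch of the kind of random password a password manager might suggest. The function name `generate_password`, the default length of 20, and the rule that every character class must appear are my own assumptions for illustration; this is not how LastPass or 1Password actually generate passwords.

```python
import secrets
import string

# Character classes the password must draw from (an illustrative choice).
CLASSES = [
    string.ascii_lowercase,
    string.ascii_uppercase,
    string.digits,
    string.punctuation,
]


def generate_password(length: int = 20) -> str:
    """Return a random password containing at least one character of each class."""
    if length < len(CLASSES):
        raise ValueError("length is too short to include every character class")
    alphabet = "".join(CLASSES)
    while True:
        # secrets uses the operating system's cryptographic random source,
        # unlike the random module, which is not meant for security purposes.
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class appears at least once.
        if all(any(ch in cls for ch in candidate) for cls in CLASSES):
            return candidate


if __name__ == "__main__":
    print(generate_password())
```

The point is the same as the tip itself: a password you never chose, and never have to remember, is one you can't reuse or give away by accident.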

How many cybersecurity measures you take comes down to two simple questions … First, how much pain and hassle are you willing to deal with to protect your data? And second, how much pain is a hacker willing to go through to get to your data?

My son always says, "you've already been hacked … but have you been targeted?" Something to think about!
