It's no surprise that there is often a disparity between what experts believe and what the average adult feels. It's even more pronounced in industries like AI that have been lambasted by science fiction and popular media.

Even just a few years ago, many of my advisors and friends told me to avoid using the term "AI" in our materials because they thought people would respond negatively to it. Back then, people expected AI to be artificial and clunky … yet, somehow, it also reminded them of dystopian stories about AI Overlords and Terminators. An incompetent superpower is scary … so is a competent superpower you can't trust!

As AI integrates more heavily into our everyday lives, people's hopes and concerns are intensifying… but should they be?

Pew Research Center surveyed over 5,000 adults and 1,000 experts about their concerns related to AI. The infographic shows the difference in concern those groups had regarding specific issues.

Statista via VisualCapitalist

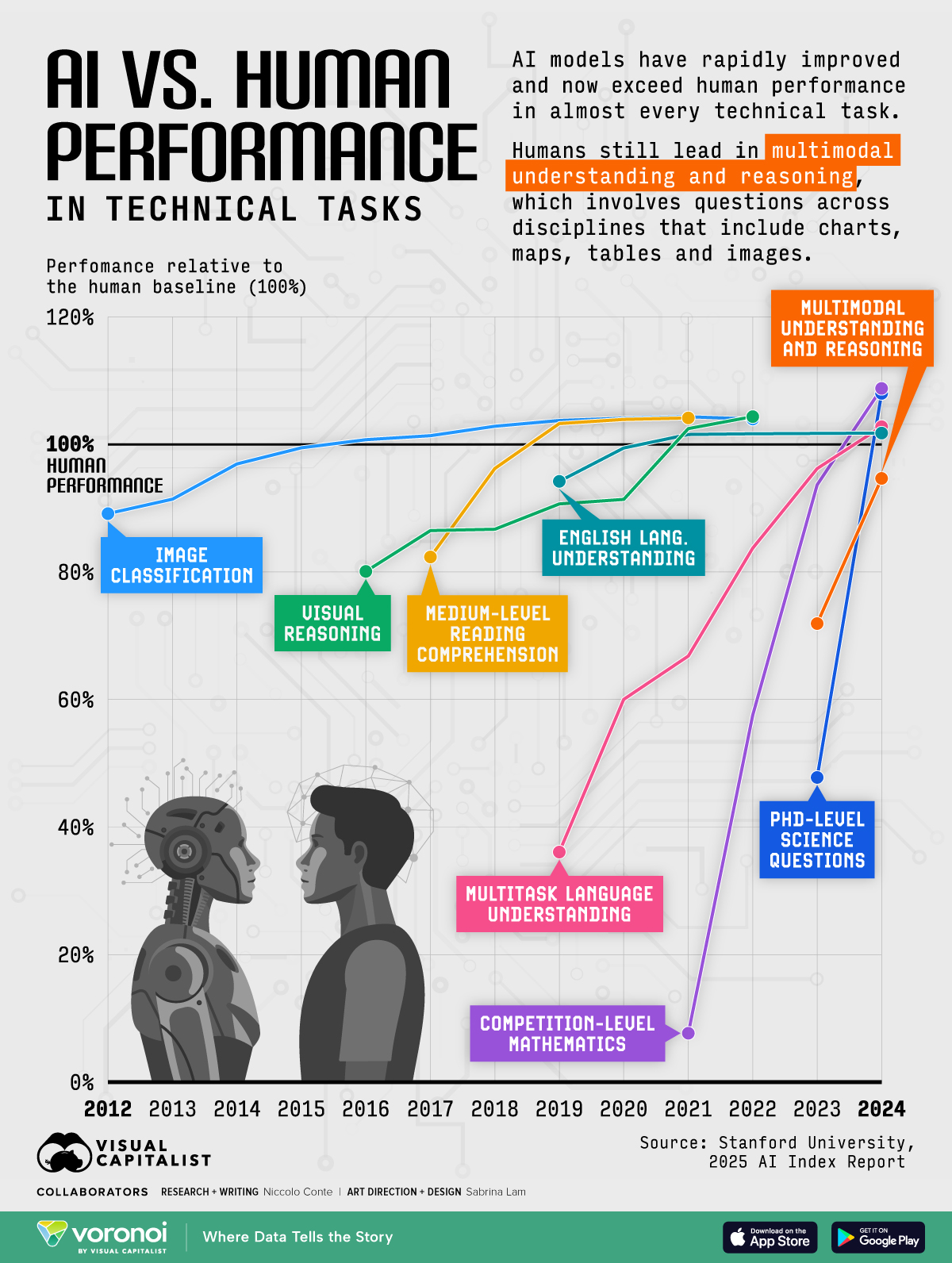

Nearly half of experts (47%) report being more excited than concerned about AI’s future. Among U.S. adults, just 11% say the same.

Instead, 51% of adults say they’re more concerned than excited — more than triple the rate of experts (15%).

The most common (and well-founded) fears center on misinformation and the misuse of information. Here, experts and the average adult are aligned.

I am consistently surprised by the lack of media literacy and skepticism demonstrated by otherwise intelligent people. Images and articles that scream "fake" or "AI" to me are shared virally and used not only to take advantage of the most susceptible but also to create dangerous echo chambers.

Remember how bad phishing e-mails used to be, and how many elderly or disabled people still ended up sending money to a fake prince from some faraway country? Even my mother, an Ivy League-educated lawyer, couldn't help but click on some of these e-mails. Since then, the quality of these attacks has risen dramatically.

And we're seeing the same thing now with AI. Not only are people falling for fake images, videos, and audio, but there is also the potential for custom apps and AI avatars built entirely for exploitation.

AI Adoption Implications

Experts and the average adult differ significantly in their beliefs about the long-term ramifications of AI adoption, such as potential isolation or job displacement.

I'm curious: how concerned are you that AI will lead to fewer connections between people or to job loss?

I often say that technology adoption has very little to do with technology and much more to do with human nature.

That obviously includes AI adoption as well.

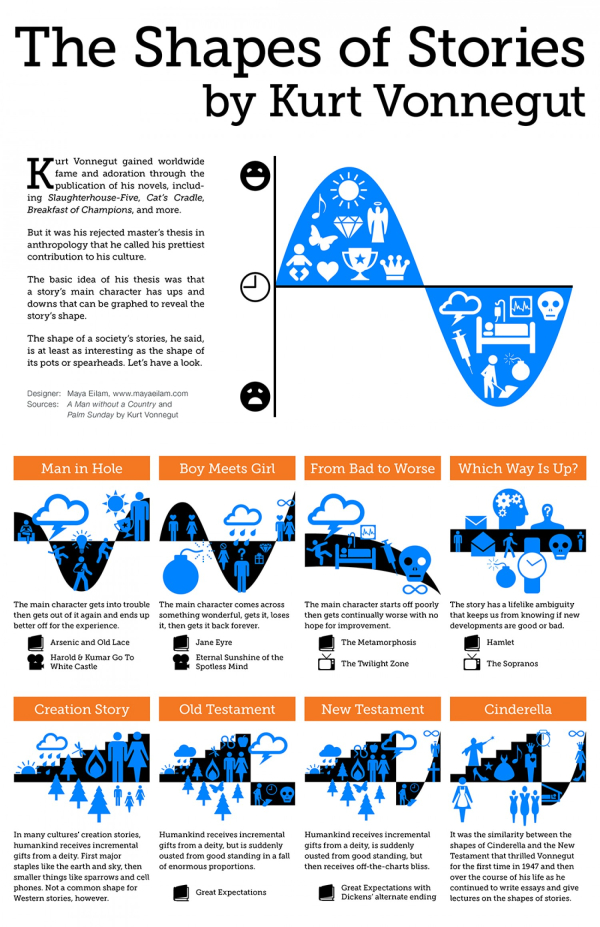

Career growth often means abandoning an old role to take on something new and better. It's about delegating, outsourcing, or automating tasks so you can free up time to work on things that matter more.

It may sound like a joke, but I don't believe most people will lose their jobs to AI. Instead, they'll lose their jobs to people who use AI better. The future of work will be about amplifying human intelligence … making better decisions, and taking smarter actions. If your job is about doing those things – and you don't use AI to do them – you will fall behind, and there will be consequences.

It's the same way technology transformed farming. Technology didn't put people out of work, but it did force them to work differently.

Innovation has always created opportunity and prosperity in the long term. Jobs may look different, and some roles may be phased out, but new jobs will take their place. Think of it as tasks being automated, not jobs.

Likewise, COVID is not why people have resisted returning to the office. COVID might have allowed them to work remotely in the first place, but their resistance to going back simply reflects human nature.

When people found that technology enabled them to meet expectations without a commute, opportunities and possibilities expanded.

Some used the extra time to learn and grow, raising their expectations. Others used that time to rest or focus on other things. They're both choices, just with different consequences.

Choosing to Contract or Expand in the Age of AI

AI presents us with a similar inflection point. I could have easily used AI to write this article much faster, and it certainly would have been easier in the short term. But what are the consequences of that choice?

While outreach and engagement are important, the primary benefit of writing a piece like this, for me, is taking the time to go through the exercise of thinking about these issues … what they mean, what they make possible, and how that impacts my sense of the future. That wouldn't happen if I didn't do the writing myself.

I often say, "First bring order to chaos … then wisdom comes from making finer distinctions." Doing work often entails embracing the chaos and making finer distinctions over time as you gain experience. With repetition, the quality of those results improves. As we increasingly rely on technology to do the work, to learn, and to grow, the technology learns and grows. If you fail to also learn and grow, it's not the technology's fault. It is a missed opportunity.

The same is true for connection. AI can help you connect better with yourself and others… or it can be another excuse to avoid connection.

You can now use an AI transcription service to record every word of an interaction, take notes, create a summary, and even highlight key insights. That sounds amazing! But far too many people become accustomed to the quality of that output and fail to think critically, make connections, or even read and process the information.

It could be argued that our society already has a connection problem (or an isolation epidemic), regardless of AI. Whether you blame social media, remote work, or COVID-19, how we connect (and what we consider "connection") has been changing for a long time. However, many people still lead fulfilling lives despite the technology … again, it's a choice. Do you use these vehicles to amplify your life, or are they a substitute and an excuse for failing to pursue connection in the real world?

As I've said, actions have consequences … and so do inactions.

I'm curious to hear your thoughts on these issues. Are you focused on the promise or the perils of AI?
