Man acts as though he were the shaper and master of language, while in fact language remains the master of man. – Martin Heidegger

Words are powerful. They can be used to define, obscure, or even to create reality. They can be taken alone, as precise definitions, or they can be part of a broader spectrum or scale. They can create or destroy … uplift or demoralize. Their power is seemingly limitless.

Language is like a hammer … you can use it to create or destroy something. Although it evolved to aid social interactions and facilitate our understanding of the world, it can also constrain how we perceive it and limit our grasp of technological advances and possibilities.

Before I go into where language fails us, it’s essential to understand why language is so important.

Language Facilitates Our Growth

Because without our language, we have lost ourselves. Who are we without our words? – Melina Marchetta

Language is one of the master keys to advanced thought. As infants, we learn by observing our environment, reading facial expressions and body language, and reflecting on our perceptions. As we improve our understanding and use of language, our brains and cognitive capabilities develop more rapidly.

Language also lets us cooperate and share expertise, and that ability has allowed us to build complex societies and advance technologically. However, as exponential technologies accelerate our progress, language itself may seem increasingly inadequate for the tasks at hand.

What happens when we don’t have a word for something?

The limits of my language mean the limits of my world – Ludwig Wittgenstein

English is famous for co-opting words from other languages, and many languages have nuanced words that are hard to express well in any other.

- Schadenfreude – German for pleasure derived by someone from another person’s misfortune.

- Layogenic – Tagalog for someone who looks good from afar but appears less attractive as you see the person closer.

- Koi No Yokan – Japanese for the sense upon first meeting a person that the two of you are going to fall in love.

Expressing new concepts opens up our minds to new areas of inquiry. In the same vein, the lack of an appropriate concept or word often limits our understanding.

Wisdom comes from finer distinctions … but sometimes we don’t have words for those distinctions. Here are two examples.

- An artist who has studied extensively for many years can somehow “know” that a work is a fake without being able to explain why.

- A professional athlete can recognize the potential in an amateur better than a bystander can.

How is that possible?

They’re subconsciously recognizing and evaluating factors that others couldn’t assess consciously.

Language as a Limitation

When it comes to atoms, language can be used only as in poetry. The poet, too, is not nearly so concerned with describing facts as with creating images. – Niels Bohr

In Buddhism, there’s the idea of an Ultimate Reality and a Conventional Reality. Ultimate Reality refers to the objective nature of something, while Conventional Reality is inextricably tied to our thought processes and heavily influenced by our choice of language.

Said differently, language is one of the most important factors in determining what you focus on, what you make it mean, and even what you choose to do. Ultimately, language conveys cultural and personal values and biases, and influences how we perceive “reality”.

This is part of the challenge we have with AI systems. They have incredible power to shape our exposure to language and thought patterns, which gives the platforms that deploy them significant power to shape their audiences’ thoughts and perceptions. We talked about this in last week’s article, and we’ll dive deeper in the future.

To paraphrase philosopher David Hume, our perception of the world is drawn from ideas and impressions. Ideas can only ever be derived from our impressions through a process that often leads us to contradictions and logical fallacies.

Instead of exploring the true nature of things or thinking abstractly, language sifts and categorizes experiences according to our prior heuristics. When you’re concerned about survival, those heuristics save you a lot of energy; when you’re trying to expand the breadth and depth of humanity’s capabilities, they’re potentially a hindrance.

The world around us is changing faster than ever, and complexity is increasing exponentially. It will only get harder to describe the variety and magnificence of existence with our lexicon … so why try?

We personify the world around us, and that habit limits our creativity.

Many of humanity’s greatest inventions came from skepticism, abstractions, and disassociations from norms.

A mind enclosed in language is in prison. – Simone Weil

What could we create if we let go of language and our intertwined belief systems?

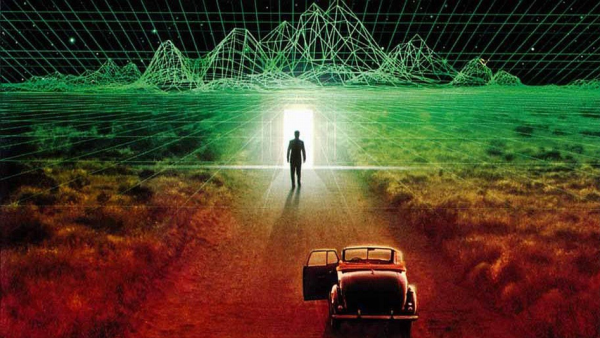

There has recently been a lot of press in which AI experts argue that the next big jump in AI won’t come from large language models but from world models.

Likewise, AI consciousness and superintelligence have become more common topics of discussion and speculation.

When will AI have human-like consciousness?

I will try to answer that, but first, I want to deconstruct the idea a bit. The question itself makes assumptions based on how humans tend to personify things and rely on past patterns to evaluate what’s in front of us.

Said differently, I’m not sure we want AI to think the way humans do. I think we want to make better decisions, take smarter actions, and improve performance. And that means thinking better than humans do.

Returning to the original question, I think the term “consciousness” is likely a misnomer, too.

What is consciousness, and what makes us think that for technology to surpass us, it needs it? The idea that AI will eventually have a “consciousness” may be a symptom of our own linguistic biases.

Artificial consciousness may not be anything like human consciousness in the same way that alien lifeforms may not be carbon-based. An advanced AI could solve problems that even the brightest humans cannot. However, being made of silicon or graphene, it may not have a conscious experience. Even if it did, it likely wouldn’t feel emotions (like shame or greed) … at least not the way we describe them.

Meanwhile, increasingly sophisticated AIs seem to pass some new hallmark of consciousness every day. They even have their own AI-only social media network now.

Humans Are The Real Black Box

But if thought corrupts language, language can also corrupt thought – George Orwell

Humans are nuanced and surprisingly non-rational creatures. We’re prone to cognitive biases, fear, greed, and discretionary mistakes. We create heuristics from prior experiences (even when they don’t serve us), and we can’t process information as cleanly or efficiently as a computer. We unfailingly search for meaning, even where there isn’t any. Though flawed, we’re perfect in our imperfections.

Even when scientists use expensive brain-scanning machines, they can’t fully make sense of what they see. When humans explain their own behavior, the explanations are often inaccurate, more like retrospective rationalizations or confabulations than summaries of the complex computer that is the human brain.

When I first wrote on this subject, I described Artificial Intelligence as programmed, precise, and predictable. At the time, AI was heavily influenced by the data fed into it and the programming of the human who created it. In a way, that meant AI was transparent, even if the logic was opaque.

Today, AI can exhibit emergent capabilities, such as complex reasoning, in-context learning, and abstraction, that were not explicitly programmed by humans. These behaviors can be impressive and highly useful. They are beginning to extend far beyond what the original developers explicitly designed or anticipated (which is why we’re discussing user-sovereign systems versus institutional systems).

In short, we don’t just need to understand how AI was built; we need frameworks for understanding how it acts in diverse contexts. If an AI system behaves consistently with its design goals, performs safely, and produces reliable results, our trust in it can be justified even without perfect insight into every aspect of its internal reasoning. But that trust should rest on rigorous evaluation, interpretability efforts, and awareness of the system’s limitations.

Do you agree? Reach out and tell me what you think.