In last week's article on Stanford's AI Index, we broadly covered many subjects.

One of them deserves a closer look: the concept of AI agents.

One way to improve AI is to create agentic AI systems capable of autonomous operation in specific environments. However, agentic AI has long challenged computer scientists, and the technology is only now starting to show promise. Current agents can play complex games, like Minecraft, and are getting much better at tackling real-world tasks like research assistance and retail shopping.

A common discussion point is the future of work. The concept deals with how automation and AI will redefine the workforce, the workday, and even what we consider to be work.

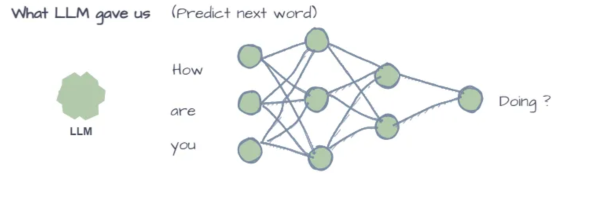

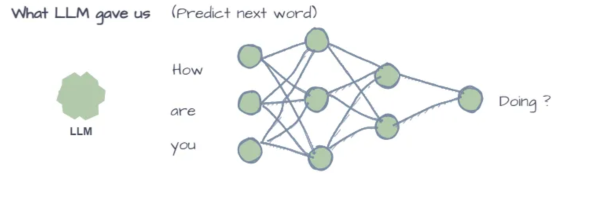

Up until now, AI has been confined to very narrow applications: powerful, but limited in scope. Generative AI and LLMs have increased the variety of tasks we can use AI for, but that's only the beginning.

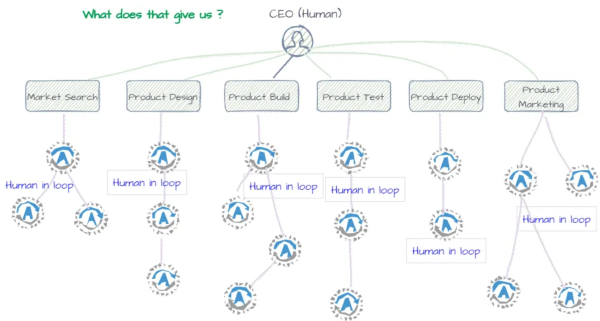

via Aniket Hingane

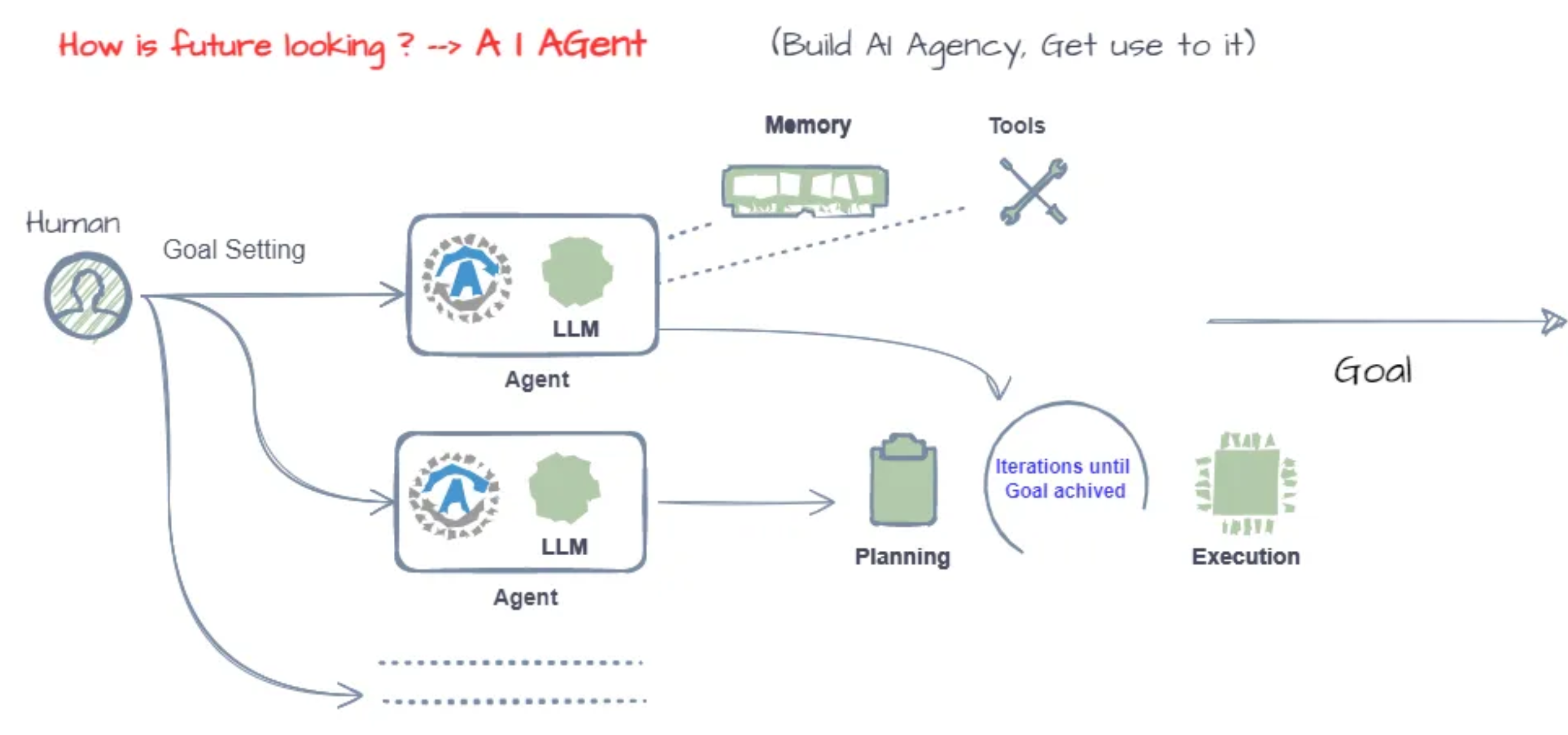

AI agents represent a massive step toward intelligent, autonomous, and multi-modal systems working alongside skilled humans (and replacing unskilled workers) in a wide variety of scenarios.

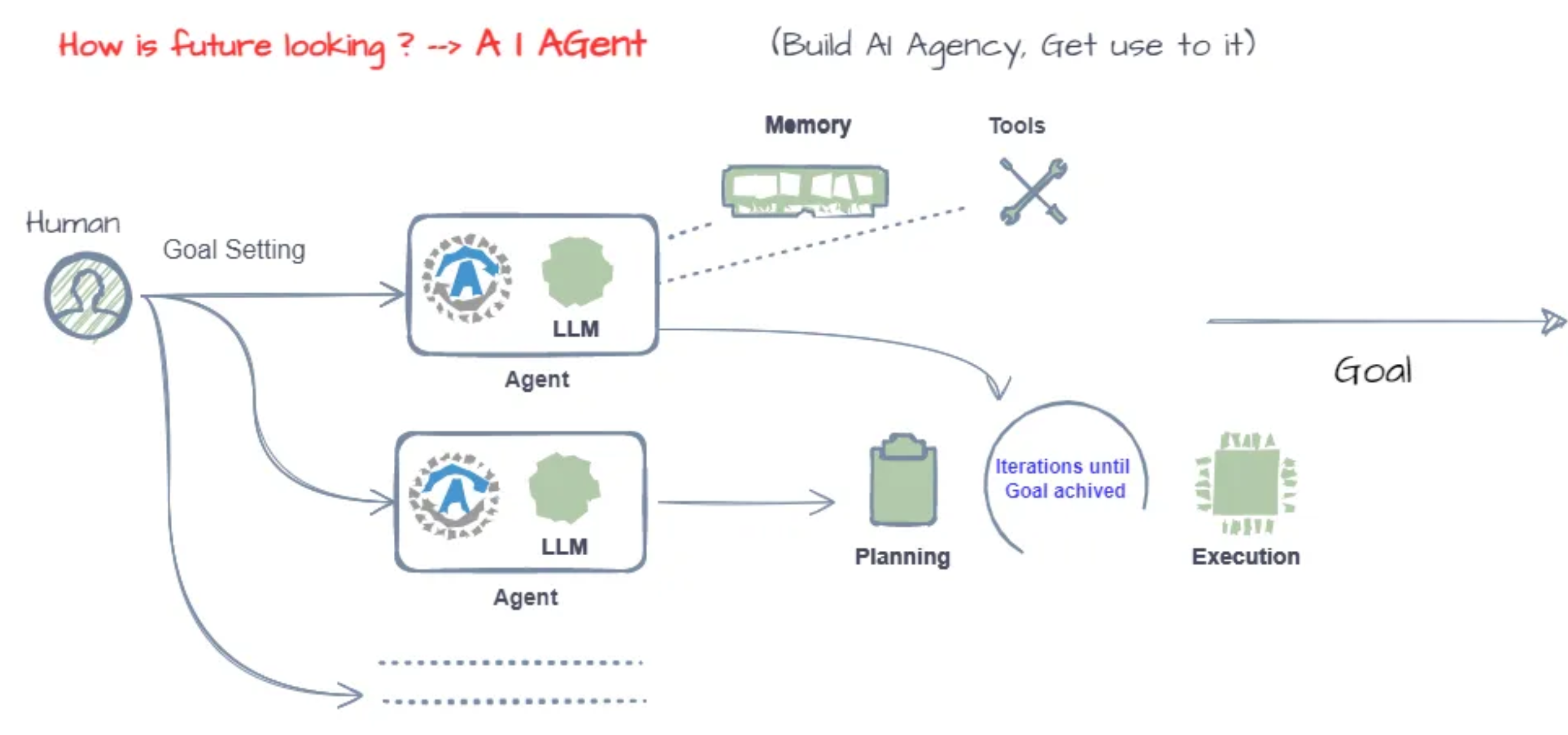

Eventually, these agents will be able to understand, learn, and solve problems without human intervention. There are a few critical improvements necessary to make that possible.

- Flexible goal-oriented behavior

- Persistent memory & state tracking

- Knowledge transfer & generalization

- Interaction with real-world environments

As models become more flexible in understanding and accomplishing their goals and begin to apply that knowledge to new real-world domains, they will go from intelligent-seeming tools to powerful partners with the ability to handle multiple tasks like a human would.

While they won't be human (or perhaps even seem human), we are on the verge of a technological shift that is a massive improvement over today's chatbots.

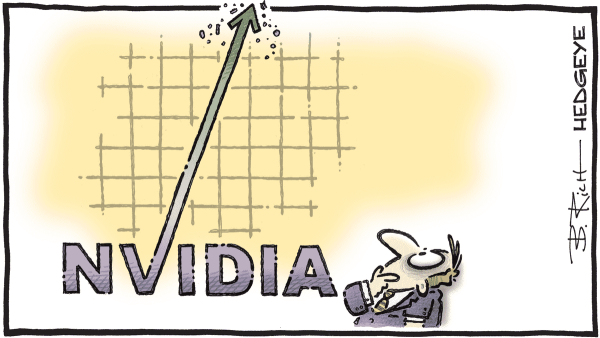

I like to think of these agents as the new assembly line. The assembly line revolutionized the workforce and drove an industrial revolution, and I believe AI agents will do the same.

As technology evolves, improvements in efficiency, effectiveness, and certainty are inevitable. For example, with a proverbial army of agents creating, refining, and releasing content, it is easy to imagine a process that would take multiple humans a week getting done by agents in under an hour (even with human approval processes).

To make it literal, imagine using agents to write this article. One agent can be skilled in writing outlines and crafting headlines. Another could focus on research and verification of research. Then you have an agent to write, an agent to edit and proofread, and a conductor agent that makes sure the quality is up to snuff and replicates my voice. If the goal were to make it go viral, there could be a virality agent, an SEO keyword agent, etc.

Separating the activities into multiple agents (instead of trying to craft a single, vertically integrated agent) reduces the chances of "hallucinations" and self-aggrandizement. In theory, it can also remove the human from the process entirely.
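For readers who think in code, here is a minimal sketch of that division of labor. Everything in it is an assumption made for illustration: the `Draft` structure, the agent function names, and the `conductor` are hypothetical, and each agent is a stub standing in for what would really be a call to a model. It only shows the shape of the idea: small specialists passing shared state down the line, with an approval gate at the end.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Draft:
    """Shared state handed from one agent to the next (hypothetical structure)."""
    topic: str
    outline: List[str] = field(default_factory=list)
    notes: List[str] = field(default_factory=list)
    text: str = ""
    log: List[str] = field(default_factory=list)  # records which agents have run


# Hypothetical agent type: takes a draft, returns the updated draft.
Agent = Callable[[Draft], Draft]


def outline_agent(draft: Draft) -> Draft:
    # Stub: a real agent would ask a model for headlines and an outline.
    draft.outline = [f"Why {draft.topic} matters", f"Where {draft.topic} is headed"]
    draft.log.append("outline")
    return draft


def research_agent(draft: Draft) -> Draft:
    # Stub: a real agent would gather sources and verify them.
    draft.notes = [f"(unverified note for: {heading})" for heading in draft.outline]
    draft.log.append("research")
    return draft


def writer_agent(draft: Draft) -> Draft:
    # Stub: pairs each heading with its research note to form the body text.
    sections = [f"{h}\n{n}" for h, n in zip(draft.outline, draft.notes)]
    draft.text = "\n\n".join(sections)
    draft.log.append("write")
    return draft


def editor_agent(draft: Draft) -> Draft:
    # Stub: a real agent would proofread; this one only trims whitespace.
    draft.text = draft.text.strip()
    draft.log.append("edit")
    return draft


def conductor(topic: str, agents: List[Agent], approve: Callable[[Draft], bool]) -> Draft:
    """Run each specialist in order, then pass the result through an approval gate."""
    draft = Draft(topic=topic)
    for agent in agents:
        draft = agent(draft)
    if not approve(draft):
        raise ValueError("draft rejected at the approval step")
    return draft


if __name__ == "__main__":
    pipeline = [outline_agent, research_agent, writer_agent, editor_agent]
    # The approval gate here is a trivial check; in practice it could be a person.
    result = conductor("AI agents", pipeline, approve=lambda d: bool(d.text))
    print(result.log)
```

The `approve` callback is where a human (or a stricter quality agent) would sit. Swapping in a virality or SEO agent would just mean adding another function to the list.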

Now, I enjoy the writing process. I'm not trying to remove myself from it. But the capability is still there.

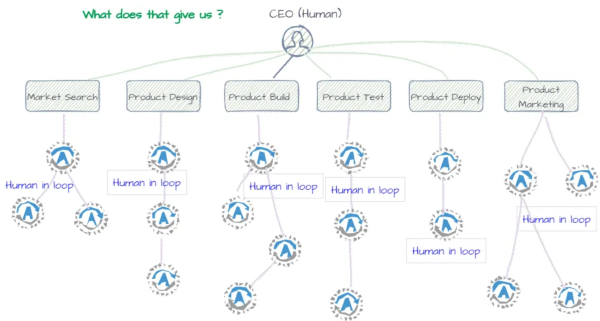

Soon, we will start to see agent-based companies. Even as agentification increases, though, I believe humans will remain a necessary part of the feedback loop and an important part of the workforce (at least during my lifetime).

Another reason humans matter is that they are still the gatekeepers, meaning humans have to become comfortable with a process before they will allow it.

Trust and transparency are critical to AI adoption. Even if AI excels at a task, people are unlikely to use it blindly. To truly embrace AI, humans need to trust its capabilities and understand how it arrives at its results. This means AI developers must prioritize building systems that are both effective and understandable. Fostering a sense of ease and trust makes users more receptive to the benefits AI or automation offers.

Said a different way, just because AI can do something doesn't mean that you will use the tool or let AI do it. It has to be done a "certain" way in order for you to let it get done … and that involves a lot of trust. As a practical reality, humans don't just have to trust the technology; they also have to trust and understand the process. That means the person building the AI or creating the automation must consider what it would take for a human to feel comfortable enough to allow the benefit.

As AI becomes more common (and as an increasingly large amount of content is created solely by artificial systems), the human touch will become a differentiator and a way to appear premium.

In my business, the goal has never been to automate away the high-value, high-touch parts of our work. I want to build authentic relationships with the people I care about, and AI and automation promise to eliminate enough frustration and bother to free us up to do just that.

The goal in your business should be to identify the parts in between those high-touch periods that aren't your unique ability – and find ways to automate and outsource them.

Remember, the heart of AI is still human (at least until our AI Overlords tell us otherwise).

Onwards!
