GPT-3 was released by OpenAI in 2020, and many considered it a huge leap forward in natural language processing (NLP).

GPT stands for Generative Pre-trained Transformer. It uses deep learning to generate text in response to an input text (a prompt). Put even more simply, it's a bot that produces text of such high quality that it can be difficult to tell whether a human or an AI wrote it.

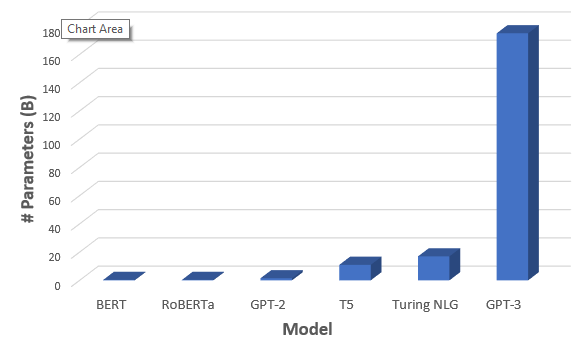

GPT-3 is more than 100x larger than its predecessor, GPT-2, and roughly 10x larger than any previous non-sparse language model. It was pre-trained on a dataset built largely from 45TB of compressed web text, filtered down to roughly 499 billion tokens. Training it on GPUs was estimated to cost $4.6 million (some estimates ran as high as $12 million). The resulting model has 175 billion parameters. On top of that, it can be fine-tuned for your specific use case after the fact.

Here are some interesting GPT-3-based tools:

- Frase – AI-Curated SEO Content

- Emerson – AI Chatbot

- Viable – Customer Feedback Analytics Platform

- Sapling – Customer Service

In practical terms, GPT-3 was a major milestone: it represented a large jump in NLP capabilities and a massive increase in scale. That said, the frenzy it set off in the community may have outpaced its actual results. To the general public, it felt like a discontinuity, a big leap toward general intelligence.

To me, and to others I know in the space, GPT-3 represents a preview of what's to come. It's a reminder that Artificial General Intelligence (AGI) is coming and that we need to be thinking about the rules of engagement and ethics of AI before we get there.

That's especially true with Elon Musk unveiling his intention to build 'friendly' robots this week.

On the scale of AI's potential, GPT-3 was a relatively small step. It's profoundly intelligent in many ways, but it's also inconsistent and lacks a concrete, reliable grasp of what it's saying.

Take it from me: an algorithm doing something amazing doesn't surprise me anymore … but neither does an amazing algorithm doing stupid things more often than you'd expect. It is all part of the promise and the peril of exponential technologies.

It's hard to measure the intelligence of tools like this because metrics like IQ don't apply. Really, it comes down to utility: does it help you do things more efficiently, more effectively, or with more certainty?

For the most part, these tools are early. They show great promise, and they do a small subset of things surprisingly well. If I think of them simply as a tool, a backstop, or a catalyst to get me moving when I'm stuck … the current set of tools is exciting. On the other hand, if you compare current tools to your fantasy of artificial general intelligence, there is a lot to improve upon.

Clearly, we are making progress. Soon, GPT-4 will take us further. In the meantime, enjoy the progress and imagine what you will do with the capabilities, prototypes, products, and platforms you predict will exist for you soon.

Onwards.
