We often talk about Artificial Intelligence's applications – meaning, what we use it for – but we rarely talk about a more crucial question:

How do we use AI effectively?

Many people misuse AI. They think they can simply plug in a dataset, press a button, and poof! Magically, an edge appears.

Most commonly, people lack the infrastructure (or the data literacy) to properly handle even the most basic algorithms and operations.

That doesn't even touch machine learning or deep learning (where you have to understand math and statistics to make sure you use the right tools for the right jobs).

Even though this is the golden age of AI … we are just at the beginning. Awareness leads to focus, which leads to experimentation, which leads to finer distinctions, which leads to wisdom.

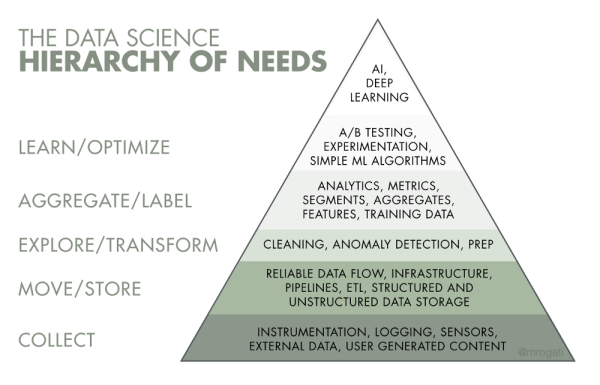

Do you remember Maslow's Hierarchy of Needs? Ultimately, self-actualization is the goal … but before you can focus on that, you need food, water, shelter, etc.

In other words, you most likely have to crawl before you can walk, and you have to be able to survive before you can thrive.

Artificial Intelligence and Data Science follow a similar model. Here it is:

First, there's data collection. Do you have the right dataset? Is it complete?

Then, data flow. How is the data going to move through your systems?

Once your data is accessible and manageable, you can begin to explore and transform it.

Exploring and transforming is a crucial stage that's often neglected.

One of the biggest challenges we had to overcome at Capitalogix was handling real-time market data.

The data stream from exchanges isn't perfect.

Consequently, using real-time market data as an input for AI is challenging. We have to identify, fix, and re-publish bad ticks or missing ticks as quickly as possible. Think of this like trying to drink muddy stream water (without a filtration process, it isn't always safe).
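To make the "filtration" idea concrete, here is a minimal Python sketch of one way to identify and repair bad or missing ticks. It is an illustration under stated assumptions, not a description of how Capitalogix does it: the `Tick` class, the `clean_ticks` function, the fixed `interval` between ticks, and the 5% `max_jump` threshold are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Tick:
    ts: int                  # timestamp in seconds (hypothetical unit)
    price: Optional[float]   # None means the price never arrived
    repaired: bool = False   # True if this tick was filled in or replaced


def clean_ticks(ticks, interval=1, max_jump=0.05):
    """Return a gap-free series; bad or missing ticks carry the last good price."""
    cleaned = []
    last_good = None
    for tick in sorted(ticks, key=lambda t: t.ts):
        # Fill any timestamps that were skipped since the previous tick.
        if cleaned:
            expected = cleaned[-1].ts + interval
            while expected < tick.ts:
                cleaned.append(Tick(expected, last_good, repaired=True))
                expected += interval
        # Assumption: a tick is "bad" if it is empty, non-positive,
        # or moves more than max_jump away from the last good price.
        bad = (
            tick.price is None
            or tick.price <= 0
            or (last_good is not None
                and abs(tick.price - last_good) / last_good > max_jump)
        )
        if bad and last_good is not None:
            cleaned.append(Tick(tick.ts, last_good, repaired=True))
        elif not bad:
            last_good = tick.price
            cleaned.append(Tick(tick.ts, tick.price))
        # A bad tick with no earlier good price is dropped:
        # there is nothing to repair it from.
    return cleaned
```

Feeding it a series with one wild price and a two-second gap returns a continuous series in which the repaired entries are flagged, so anything downstream can tell real ticks from patched ones. A simple threshold like this would also reject a genuine price move larger than `max_jump`, so a real filter would need more context than this sketch uses.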

Once your data is clean, you can then define which metrics you care about, how they all rank in the grand scheme of things … and then begin to train your models on that data.

That's a lot more steps than just plugging in a dataset, but the results are worth it.

That's the foundation to allow you to start model creation and optimization.

The point is, ultimately, it's more efficient and effective to spend the time on the infrastructure and methodology of your project (rather than to rush the process and get poor results).

If you put garbage into a system, most likely you'll get garbage out.

Slower sometimes means faster.

Onwards.
